I write spells (programs) that direct mana (electricity) through runes (logic gates) to conjure illusions (display furries on my screen)

And all of it is done through magical focuses (hardware) created out of transmuted stone (silicon) and metals.

It’s a magic crystal ball.

I keep conjuring these boring niche illusions because these weird townsfolk give me money every couple weeks as long as I keep doing it.

Hey, if it pays for the orgies, all is well!

Source - The Dragon (Dragon magazine) #10, Page 5 - https://www.annarchive.com/files/Drmg010.pdf

are you actually working on a project that displays furries on your screen right now? cause if so I am too and I wanna compare notes

You might like Ra by Sam Hughes

EE major here. All the equations in the third panel are classical electrodynamics. To explain the semiconductors needed to make the switches to make the gates in the second picture, you really need quantum mechanics. You can get away with “fudged” classical mechanics for approximate calculations, but diodes and transistors are bona fide quantum mechanical devices.

But it’s also magic lol.

Quantum Physics Postdoc here. Although technically correct, this is also somewhat misleading. You need the band structure of solids, which is due to quantization and the Pauli exclusion principle. It’s the same quantum mechanics that explains those strange electron energy levels for atoms we did in high school. The majority of quantum mechanics, however, is not required: coherence, spin, entanglement, superposition. In the field we describe semiconductors as quantum 1.0, and devices that use entanglement and superposition (i.e. a quantum computer) as quantum 2.0, and smear everything else in-between. This

It’s the 1000 Lv. boss magic counter attack against the 100 Lv. surprise attack; the complexity is just too much for our minds

Quantum Physics Postdoc here.

Can we trade?

Great write-up btw.

Can we trade?

Oh my sweet summer child, a 100x yes, if only it were possible.

But more seriously, if you’re doing EE, the world of quantum is your oyster. Specialize in RF/MW design and implementation, we use it for qubit control, and you’ll be highly valuable.

This what?

Oh no! The [clever quantum mechanics joke] got him!

[radioactive decay triggered the poison gas?]

[Quantum hype train?]

[Imposter syndrome?]

Schrödinger opened the box 😱

Interesting. Does tunneling fall under 1.0 or 2.0? Isn’t it considered a property of classical electrical engineering?

Good question. It would be application specific. I think evanescent wave coupling in EM radiation is considered “very classical” (whatever that actually means). But utilizing wave-particle duality for tunneling devices is past quantum 1.0 (1.5 maybe?). However, superconducting tunneling in Josephson junctions in a SQUID is closer to quantum 1.0, but 2.0 if used to generate entangled states for superconducting qubits for quantum computing.

Clear as mud right?

It is now that I’ve looked up the different types of tunneling you mentioned. I didn’t know there were multiple types of tunneling before now.

Thanks for the informative reply and prompting me to do some reading!

You’re talking the old CPU designs, not the current ones fighting tunneling effects or the work-in-progress photonics & 2D hybrids.

No, I am not sure that I am.

Photonic processing, whilst very cool and super exciting, is not a quantum thing… Maxwell’s equations are exceedingly classical.

As for the rest, it’s transistor design optimisation, enabled predominantly by materials science and ASML’s EUV tech I guess :), but it still exploits the same underlying ‘quantum 1.0’ physics.

Spintronics (which could be what you mean by 2D) is for sure in-between (1.5?), leveraging spin for low energy compute.

Quantum 2.0 is systems exploiting entanglement and superposition - i.e. qubits in a QPU (and a few quantum sensing applications).

Yes. You need specifically the condensed matter models, that have the added bonus of the equations making little sense without some interesting lattice designs at their side.

Semiconductors specifically have the extra nice feature of depending on time-dependent values, which everybody just hand-waves away, using time-independent ones instead because it’s too much magic.

Hmmm, so all computers are technically quantum computers?

Not at all. In a classical computer, the memory is based on bits of information which can be in either[1] state 0 or state 1, and (assuming everything has been designed correctly) won’t exist in any other state than the allowed ones. Most classical computers work with binary.

In quantum computing, the memory is based on qubits, which are two-level quantum systems. A qubit can take any linear combination of quantum states |0⟩ and |1⟩. In quantum systems, linear combinations such as Ψ = α|0⟩ + β|1⟩ can use complex coefficients (α and β), normalised so that |α|² + |β|² = 1. Since α = |α|e^(jθ) is valid for any complex number, this indicates that quantum computing allows qubits to have a phase with respect to each other. Geometrically, each qubit can “live” anywhere on the Bloch sphere, with |0⟩ at the “north pole” and |1⟩ at the “south pole” in the usual convention.
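To make the linear-combination idea a bit more concrete, here is a rough Python sketch of a single qubit as a pair of complex numbers. The function names (`make_qubit`, `probabilities`, `relative_phase`) are made up for this example and don’t come from any quantum library; it only shows that two states can have the same measurement probabilities but a different phase.

```python
import cmath
import math

def make_qubit(alpha, beta):
    """Return (alpha, beta) rescaled so that |alpha|^2 + |beta|^2 = 1."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("alpha and beta cannot both be zero")
    return alpha / norm, beta / norm

def probabilities(state):
    """Chance of reading 0 or 1 when measuring in the |0>, |1> basis."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2

def relative_phase(state):
    """Phase of beta relative to alpha, in radians."""
    alpha, beta = state
    return cmath.phase(beta) - cmath.phase(alpha)

plus = make_qubit(1, 1)      # equal superposition, no relative phase
plus_i = make_qubit(1, 1j)   # same probabilities, quarter-turn relative phase

print(probabilities(plus), relative_phase(plus))
print(probabilities(plus_i), relative_phase(plus_i))
```

A classical bit has nothing like `relative_phase`: the two example states above would be indistinguishable if all you had were the 0/1 probabilities.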

Quantum computing requires a whole new set of gates, and there’s issues with coherence that I frankly don’t 100% understand yet. And qubits are a whole lot harder to make than classical bits. But if we can find a way to make qubits available to everyone like classical bits are, then we’ll be able to get a lot more computing power.

The hardware works due to a quantum mechanical effect, but it is not “quantum” hardware because it doesn’t implement a two-level quantum system (a qubit).

[1] Classical computers can be designed with N-ary digit memory (for example, trinary can take states 0, 1, and 2), but binary is easier to design for.

then we’ll be able to get a lot more computing power.

I think that’s not quite true; it depends on what you want to calculate. Some problems have more efficient algorithms for quantum computing (famously breaking RSA and other crypto algorithms). But something like a matrix multiplication probably won’t benefit.

It’s actually expected that matrix inversion will see a polynomial increase in speed, but with all the overhead of quantum computing, we only really get excited about exponential speedups such as in RSA decryption.

Classical computers compute using 0s and 1s which refer to something physical like voltage levels of 0 V or 3.3 V respectively. Quantum computers also compute using 0s and 1s that refer to something physical, like the spin of an electron, which can only be up or down. Qubits differ, though: with a classical bit, there is just one thing to “look at” (called an “observable”) if you want to know its value. If I want to know whether the voltage level is 0 or 1, I can just take out my multimeter and check. There is just one single observable.

With a qubit, there are actually three observables: σx, σy, and σz. You can think of a qubit like a sphere where you can measure it along its x, y, or z axis. These often correspond in real life to real rotations, for example, you can measure electron spin using something called Stern-Gerlach apparatus and you can measure a different axis by physically rotating the whole apparatus.

How can a single 0 or 1 be associated with three different observables? Well, the qubit can only have a single 0 or 1 at a time, so, let’s say, you measure its value on the z-axis, so you measure σz, and you get 0 or 1, then the qubit ceases to have values for σx or σy. They just don’t exist anymore. If you then go measure, let’s say, σx, then you will get something entirely random, and then the value for σz will cease to exist. So it can only hold one bit of information at a time, but measuring it on a different axis will “interfere” with that information.

It’s thus not possible to actually know the values for all the different observables because only one exists at a time, but you can also use them in logic gates where one depends on an axis with no value. For example, if you measure a qubit on the σz axis, you can then pass it through a logic gate where it will flip a second qubit or not flip it depending on whether σx is 0 or 1. Of course, if you measured σz, then σx has no value, so you can’t say whether or not it will flip the other qubit, but you can say that they would be correlated with one another (if σx is 0 then it will not flip it, if it is 1 then it will, and thus they are related to one another). This is basically what entanglement is.

Because you cannot know the outcome when you have certain interactions like this, you can only model the system probabilistically based on the information you do know, and because measuring qubits on one axis erases its value on all others, then some information you know about the system can interfere with (cancel out) other information you know about it. Waves also can interfere with each other, and so oddly enough, it turns out you can model how your predictions of the system evolve over the computation using a wave function which then can be used to derive a probability distribution of the results.

What is even more interesting is that if you have a system like this where you have to model it using a wave function, it turns out it can in principle execute certain algorithms exponentially faster than classical computers. So they are definitely nowhere near the same as classical computers. Their complexity scales up exponentially when trying to simulate quantum computers on a classical computer. Every additional qubit doubles the complexity, and thus it becomes really difficult to even simulate small numbers of qubits. I built my own simulator in C and it uses 45 gigabytes of RAM to simulate just 16. I think the world record is literally only like 56.
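The “every additional qubit doubles the complexity” part can be sketched in a few lines of Python. This assumes the simplest possible representation, a full state vector with one complex amplitude (two 64-bit floats, so 16 bytes) per basis state. That is an assumption for illustration only; a real simulator may store things differently (density matrices, extra buffers, compression), so its actual memory use can be quite different.

```python
def state_vector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to hold 2**n_qubits complex amplitudes.

    bytes_per_amplitude=16 assumes two 64-bit floats per amplitude.
    """
    return (2 ** n_qubits) * bytes_per_amplitude

# Each extra qubit doubles the storage for this representation.
for n in (10, 20, 30, 40, 50):
    gib = state_vector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:.6g} GiB")
```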

No. There are mechanical computers, based on mechanical moving parts, but they have been outdated since 1960.

If you don’t think “it’s magic” after emag theory… At a certain point the math does start to read like spell craft.

“Yes, yes, but what ratio of turns do we need in the transformer?”

Transistors are basically magic, when you get down to the chemistry of them. But when you get up to the “current here means current out there” part it becomes understandable again. Then you get to logic gates, and maybe up to basic addition and other operations. And all understanding disappears when you get to programmable circuits which run on pure magic once again.
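The “logic gates up to basic addition” step is the one part that is easy to write down. Here is a small Python sketch that builds XOR, AND and OR out of nothing but NAND, then chains full adders into a ripple-carry adder. The function names are just chosen for this example, and real hardware obviously does this with transistors rather than function calls.

```python
def nand(a, b):
    """The only primitive gate: output is 0 only when both inputs are 1."""
    return 1 - (a & b)

def xor(a, b):
    t = nand(a, b)
    return nand(nand(a, t), nand(b, t))

def and_(a, b):
    t = nand(a, b)
    return nand(t, t)

def or_(a, b):
    return nand(nand(a, a), nand(b, b))

def full_adder(a, b, carry_in):
    """Add three bits, returning (sum_bit, carry_out)."""
    partial = xor(a, b)
    total = xor(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return total, carry_out

def add_bits(x_bits, y_bits):
    """Ripple-carry add two equal-length bit lists, least significant bit first."""
    carry = 0
    out = []
    for a, b in zip(x_bits, y_bits):
        s, carry = full_adder(a, b, carry)
        out.append(s)
    out.append(carry)  # final carry becomes the top bit
    return out

# 2 + 2 with 3-bit inputs (least significant bit first)
print(add_bits([0, 1, 0], [0, 1, 0]))
```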

And if you put the 200 layers of abstractions, indirections and quirks on top of the transistors, it’s magic again.

I was a signal integrity engineer so emag theory was my job for a while.

Honestly, magic is quite right. At the base level, how these fields are created and how electrons moving around results in these rules is just the magic of how our universe works.

You can discover the rules to live by, but why it works that way gets smaller and smaller until it’s magic.

To a certain extent, it actually is magic. We still don’t fully understand the “why” of magnetism, just the “how”

Yeah sure but then if you dig down into the standard model of particle physics and quantum field theory to get the REAL answers, you learn that this phone I’m trying not to drop on my face is just a bunch of energy field waves kind of intersecting and coupling in just the right way and… shit nevermind. It’s magic all the way down.

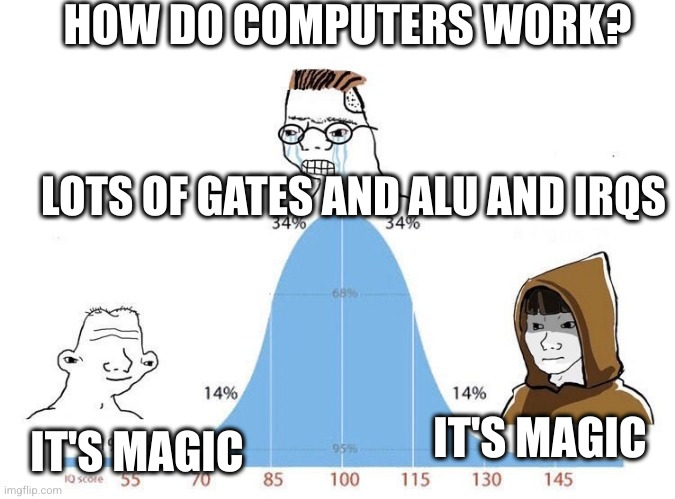

It’s far more complicated than the last panel makes it out to be.

Ah if only it was a 2 instead of 3 in your name…

It’s magic, and we are enchanting engraved runes.

Photolithography machines are basically laser printers. So we’re printing these SoCs.

I feel like there is a pretty big gap between understanding how logic gates and truth tables work and understanding the underlying physics of how modern processors work.

So crowdstrike was really the digital wizard equivalent of trying to cast “protectum de rectum” and accidentally casting “shid pants” combined with “butt crackus impenetrous”?

Now it all makes sense!

You don’t really have to be a computer expert to know about something similar to the second one. If you’ve ever read a bit about computers (even just on Wikipedia or something), you can easily have a concept similar to what the second seems to be in your head when you think about computers.

The third one doesn’t exactly try to give much impression at all on how computers work. It has some physics stuff which a lot of well educated people would have seen, not just computer scientists.

I do think kids should be taught quite a lot more about computers at a young age though, at least compared to what I was. At my high school, we did like one project in Scratch, which I just found annoying, partly because I hated using Scratch and was not taught anything in a proper coding language. I went to quite a shit high school though anyway really.

Kids who can do early maths classes should easily be able to be taught to type in a function that calculates something like a factorial. If you know some incredibly simple mechanics, it’s also very easy to code something like a ball just falling off the screen. Most kids past a certain age have heard something like “computers process numbers”.
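For what it’s worth, both of those really are only a few lines. This is a rough Python sketch, not a teaching plan: `factorial` is the usual loop, and `falling_ball` just steps a ball downwards under constant gravity and records its height each tick (the names, the time step and the value of `g` are choices made for this example, and there are no graphics, only numbers).

```python
def factorial(n):
    """n! for a non-negative integer n."""
    if n < 0:
        raise ValueError("n must be non-negative")
    result = 1
    for k in range(2, n + 1):
        result *= k
    return result

def falling_ball(height, dt=0.1, g=9.81):
    """Heights of a dropped ball, sampled every dt seconds until it lands.

    Uses simple fixed time steps, so it is only approximate.
    """
    y, v = float(height), 0.0
    heights = [y]
    while y > 0:
        v += g * dt           # gravity speeds the ball up
        y -= v * dt           # the ball moves down by speed * time
        heights.append(max(y, 0.0))
    return heights

print(factorial(5))
print(falling_ball(10.0))
```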

I think the difference between the first and second is whether you have a deep understanding of how high level languages translate into hardware operations. If you’re a novice, how that translation works might as well be magic.

The second panel understands how that translation exactly happens and then it absolutely makes sense.

The third one is the next step, where you have a deep understanding of how the underlying physical phenomena make computers work, and again that might as well be magic because explaining it is like explaining magic.

Yeah. I’m currently at the beginning of the second stage, knowing boolean algebra, logic gates and dabbling in parsers and language design; however, most of that physics looks like magic to me.

To go on a bit about what I think a computer could look like, how physicists and electrical engineers talk about exactly what computers do physically in a real CPU is not needed to think about computers in less of a “magical” way.

Kids might also have heard people say “an abacus is the simplest computer” or “the pocket calculator you use in your maths class is a particularly simple example of a real computer”.

You can make a kind of “computer” just by putting a bunch of switches or levers in a row and manually flicking them on and off to run simple bytecode I believe. Computers are just as much, if not more, about mathematical abstraction than they are about physical abstraction and engineering.

All you really need to make a kind of computer is things laid out in front of you that can be in a particular state (either 0 or 1). You could write a few 0s and 1s on a bit of paper or do the same thing with on/off switches. Then all you need is to decide some rule where you look at what the states are and it allows you to change them or write out the new states.
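As a minimal sketch of “states plus a rule”, here is a row of switches in Python with one rule that adds 1 to the binary number they show. The rule is something a person could follow by hand: flip the right-most switch, and if it went from on to off, move one switch left and repeat. The function name `step` is just picked for this example.

```python
def step(switches):
    """Apply the rule once and return the new row of switches.

    switches is a list of 0s and 1s, most significant switch first.
    """
    new = list(switches)
    for i in range(len(new) - 1, -1, -1):
        new[i] = 1 - new[i]      # flip this switch
        if new[i] == 1:          # it went off -> on, so there is no carry
            break
    return new

row = [0, 0, 0]
for _ in range(8):
    print(row)
    row = step(row)
```

Running the rule over and over is all the “clock” this machine has; when every switch is on, the next step wraps back round to all off.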

I believe it should even be possible, especially if you are still manually changing the states, to make something that looks very similar to a basic pocket calculator using this as a proof of concept.

You could try making a low resolution screen out of a grid of square bits of paper or cardboard that are different colours on each side and spin around like when there’s a secret room in a mystery novel. Those are basically pixels that are on or off, making a black and white terminal.

Displaying digits and the symbols like + and - by turning these pixels on and off should also be possible but could be a bit of a pain. In fact, you might have noticed some of the simplest pocket calculators don’t even bother making a screen as complicated or versatile as what I’m describing, because they don’t need to.
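To show why it is “possible but a bit of a pain”, here is a rough Python sketch of that kind of screen. Each character is a hand-made 3×5 grid of on/off pixels; the `GLYPHS` table and the `render` function are invented for this example, and only the handful of characters needed for “2+2=4” are included.

```python
# Each glyph is 5 rows of 3 pixels; "1" is a card flipped to its dark side.
GLYPHS = {
    "2": ["111", "001", "111", "100", "111"],
    "4": ["101", "101", "111", "001", "001"],
    "+": ["000", "010", "111", "010", "000"],
    "=": ["000", "111", "000", "111", "000"],
}

def render(text, on="#", off="."):
    """Return the text drawn as rows of on/off pixels, one glyph per character."""
    rows = []
    for r in range(5):
        row = " ".join(GLYPHS[ch][r] for ch in text)
        rows.append(row.replace("1", on).replace("0", off))
    return "\n".join(rows)

print(render("2+2=4"))
```

Every new symbol needs its own hand-drawn entry in the table, which is presumably why the simplest calculators use fixed segment displays instead.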

The point is that when you can display numbers and symbols on your screen based on how the long line of levers are set up, and essentially do addition, the only other thing you need is user input.

“User input” here is kinda tricky. If you actually made this computer and did it by manually changing the levers, you don’t necessarily care since you have such an intricate understanding of this computer and can just change around the other levers describing what the screen should look like and what numbers are stored, and I’m not gonna say too much about trying to make buttons. You could just press a button and then store that the button was pressed during that cycle of executing your kind of bytecode. It would be pointless if you were manually flipping all the levers anyway though.

If you were following and most of what I said seemed right, you can hopefully see that you’ve hypothetically built something like a pocket calculator. You’re probably not actually unironically doing all the steps and making a proper computer if you’re reading this, but hopefully this computer has been built up as more of a mathematical object that changes state based on certain rules.

Anybody who isn’t a computer genius would probably cry (or at least tell someone to fuck off) if they were asked to correctly run through every step to put in 2+2, have this calculator work out the result, then display it on the screen.

You can imagine a pissed off “IT worker” going through and actually doing all of this though. This can be compared to someone who’s skilled with an abacus.

But the abacus person would be way faster. It would be no competition. That’s because your silly excuse for a computer has a CPU frequency of the reciprocal of whatever unimaginably tedious period of time it takes your “IT worker” to figure out what they’re even supposed to be doing in this crazy job and do all the steps correctly.

So at this point I think at least I have some idea on what a computer is. You can think about it as just being a CPU that’s usually somehow connected to an input and a display where a bunch of levers (or something else) move around and it does calculations. Every calculator is arguably a computer.

A computer is a kind of mathematical concept of a state machine that does calculations by constantly changing state.

I think a computer is more of a concept though. It doesn’t even have to actually exist. If you can do binary arithmetic in your head by thinking of some binary numbers and trying to add them or move them around based on certain rules, you’re creating a very simple computer in your own mind.

People are sort of computers. Binary numbers are obviously analogous to base 10 or whatever else. If you can do a basic calculation that isn’t by literally dragging little bits of food together like an infant, then you’re using some kind of computer. That should be especially clear if you try to remember numbers to use in future calculations. You might even remember certain values in your own head like pi. Look up the Boltzmann brain if you want to as well.

A lot of people don’t like the idea people are computers though, and I am in no way convinced everyone is an automaton. People could just have a computer sometimes running in their head. Also the mind seems to go beyond being just a concept itself, even though computers can be used to write computer-based procedures (sets of instructions).

Getting back to the point, the original calculators were workers in offices who used abacuses. That was just the word for them.

Alan Turing came up with the mathematical idea of a computer (the Turing machine) in the 1930s, and during the war he worked on breaking the Enigma cipher to decode what the Nazis were saying. I don’t know whether he imagined the stuff people would be doing with computers now.

There’s one more thing I wanted to say that you may already have realised: computers can do a lot more than be calculators. They can use a set of mathematical steps (a program) to decide what to do or display. If you made your screen out of a grid of spinning card like I said before (and you had enough levers), there’s nothing stopping you from having more complicated rules describing how to make a text terminal or run Doom. An easier example would be a computer that does absolutely nothing except run the original Pong game. Very old consoles that didn’t even have any kind of boot menu were like this.

Everything else we do on computers nowadays should be possible if you’re clever enough and work it all out (though going beyond a certain point would be very tedious and not very useful to anyone). Also if you wanted something like wifi, you’d also need to figure out how to connect it to some kind of radio.

The first step in going a lot further, though, would be to make a full text terminal rendering letters and try to implement your own version of assembly language you could convert to bytecode.
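A toy version of “your own assembly language converted to bytecode” fits in a few dozen lines. This Python sketch is entirely made up for illustration: the mnemonics (`PUSH`, `ADD`, `MUL`, `PRINT`, `HALT`), their numeric opcodes and the stack-machine design are hypothetical choices, not any real instruction set.

```python
# Hypothetical instruction set: mnemonic -> opcode number.
OPCODES = {"HALT": 0, "PUSH": 1, "ADD": 2, "MUL": 3, "PRINT": 4}

def assemble(source):
    """Turn lines like 'PUSH 2' into a flat list of integers (the bytecode)."""
    code = []
    for line in source.strip().splitlines():
        parts = line.split()
        if not parts:
            continue
        code.append(OPCODES[parts[0]])
        if parts[0] == "PUSH":
            code.append(int(parts[1]))   # PUSH carries one operand
    return code

def run(code):
    """Execute the bytecode on a tiny stack machine and return what it printed."""
    stack, output, pc = [], [], 0
    while pc < len(code):
        op = code[pc]
        pc += 1
        if op == OPCODES["HALT"]:
            break
        elif op == OPCODES["PUSH"]:
            stack.append(code[pc])
            pc += 1
        elif op == OPCODES["ADD"]:
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == OPCODES["MUL"]:
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        elif op == OPCODES["PRINT"]:
            output.append(stack[-1])     # show the top of the stack
        else:
            raise ValueError(f"unknown opcode {op}")
    return output

program = """
PUSH 2
PUSH 2
ADD
PRINT
HALT
"""
bytecode = assemble(program)
print(bytecode)
print(run(bytecode))
```

With levers, `bytecode` would be the positions you set by hand and `run` would be the poor “IT worker” walking along the row.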

Anyway, I am not a computer expert at all and have 0 computer science qualifications, but that’s how I understand computers. Feel free to correct me or tell me if you read it all, you fully agree and you are a computer expert. That description may have lacked some details but hopefully I built up the concept of a computer and didn’t make any serious mistakes.

I don’t really care about every single detail of how tiny computer chips work in real life, at least to have a concept of what a computer is.

TL;DR: I think of computers more as calculators, think that explains most of what they do, and haven’t spent countless years studying how computers work. I’ve never even done this but I can explain the idea behind creating a fully fledged computer in minecraft or something like that (wiring systems make some of this a lot less manual).

One of the simplest known Turing-complete systems is the Rule 110 automaton.
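For anyone who hasn’t seen it, Rule 110 is a row of cells where each cell’s next value depends only on itself and its two neighbours. A minimal Python sketch follows; wrapping the row round at the edges is a simplification chosen for this example (the usual definition uses an infinite row), and `step_110` is just a name picked here.

```python
# Rule 110 lookup: (left, centre, right) -> new centre.
# The outputs for 111, 110, ..., 000 spell 01101110, which is 110 in binary.
RULE_110 = {
    (1, 1, 1): 0, (1, 1, 0): 1, (1, 0, 1): 1, (1, 0, 0): 0,
    (0, 1, 1): 1, (0, 1, 0): 1, (0, 0, 1): 1, (0, 0, 0): 0,
}

def step_110(cells):
    """Apply Rule 110 once to a row of cells, wrapping around at the edges."""
    n = len(cells)
    return [
        RULE_110[(cells[(i - 1) % n], cells[i], cells[(i + 1) % n])]
        for i in range(n)
    ]

row = [0] * 31 + [1]
for _ in range(16):
    print("".join("#" if c else "." for c in row))
    row = step_110(row)
```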

The ideas behind making a general purpose modern computer like we have today from there are not hugely difficult to get some intuition for.

If you wanna get only actually slightly more complicated than everything I explained there about how I see computers, you can switch gears to imagining actually trying to create a Minecraft computer you can code and play computer games on.

Having a wiring system like redstone could automate all the logic of how the states change if you were clever enough with how you designed it. You could also design a similar, more mechanical computer using switches that actually flip on and off based on an initial state and go from there in real life. A computer scientist from the future in a society that does very little, if anything, with electricity like we have today could actually make a computer like this over a long course of time and wow the people of the past before computers got invented.

Turing was actually just solving a maths problem, so any kind of invention that can be made to solve the same kind of problem (especially in an automated way) was a device essentially equivalent to Turing’s computer.

You could design a computer in Minecraft (or in real life), that actually just had switches or buttons for user input, with all the other switches being hidden somewhere else and flipping automatically in complicated ways to activate or deactivate pixels on a screen. This would be way faster than the “Information Technology worker” (who would need a lot of specialist knowledge and skills about how the computer was built) from before, and you could much more easily make a general purpose computer from there.

That more mechanical or Minecraft-y computer is only in theory a little bit more sophisticated (since it follows the same basic rules anyway), but can be made to do anything we do on computers today by building up enough coding. Someone with enough technical knowledge could write their own full compiler tower for a computer/CPU architecture and go all the way to writing high level interpreters so their machine can run modern Python code.

In the early days of computers, when some very technically skilled people had access to computers they could write assembly on, the only next step would be to write a general purpose operating system (instead of just having one set of instructions to do a particular thing), which is easier than it sounds for a handful of experts across the world.

A general purpose computer with an operating system in the early days would have started up to a command prompt where you could start processes in the shell that the kernel would store values from and flip between while the switches were going crazy and making flipping (or probably a lot of whirring) noises.

You could write and run any program on that kind of computer with a general purpose OS, and the operating systems we use today were written by teams of very clever people only a few decades in the past who were quick to think about this.

Programs stored on your computer could be run from the shell, and you could easily write your own simple ones to do more complicated calculations like factorials. You could start trying to do things like give a very precise estimate for pi based on a mathematical series by writing a computer program. Again, computers are basically calculators and any kind of calculator you can find that makes you not have to manually add stuff on bits of paper is arguably a kind of computer.
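As an example of the pi idea, here is a short Python sketch using the Leibniz series, pi/4 = 1 - 1/3 + 1/5 - 1/7 + … That particular series is my pick for the example because it is the easiest to type in; it converges very slowly, so a genuinely precise estimate would want a better series.

```python
import math

def estimate_pi(terms):
    """Approximate pi by summing the first `terms` terms of the Leibniz series."""
    total = 0.0
    for k in range(terms):
        total += (-1) ** k / (2 * k + 1)
    return 4 * total

for n in (10, 1000, 100000):
    approx = estimate_pi(n)
    print(n, approx, abs(approx - math.pi))
```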

These computers were the best kind of calculators ever invented, though. They were a cutting edge technology that a kind of scientist with coding skills could use to run complicated calculations. This was the start of “computer labs” and “computer scientists” that were glorified coders, but not glorified engineers.

If we go back to what this kind of computer looks like internally, a computer lab was only actually one computer. The computer was made from huge stacks of towers of circuits that were connected and did different things. The computer would have rows of different early keyboards with outdated cables paired with old screens that only displayed monochrome text to people sat at desks doing maths.

If you were an early computer scientist and what you cared about was doing mathematical and scientific calculations, this is what computing was like in around the 70s (I think).

There weren’t any videogames or consoles that anyone cared about. A Pong console or an Atari was available around this time, but very few if any people actually enjoyed playing them.

If you’ve ever seen the Angry Video Game Nerd, or AVGN, (especially his early videos) on YouTube, he introduced these kinds of computers to a far younger generation. His videos show what it’s like to play games on them. Kids with more advanced consoles would watch him to see what playing videogames was originally like.

AVGN amused the vast majority of people in his target audience just by showing these games and cussing at them while trying to play. The videos were in a way set in the 70s, and his humour in the early AVGN videos was about complaining about gaming in the 70s and early 80s. He’s a collector of old computers who acts out what it would be like to live in a time where that was your computer. You could even think of his persona as being a computer scientist who only had digital videogame machines (a limited form of computer) and couldn’t do anything he wanted on them.

But for all the computer scientists had, computer labs had no graphics. Early consoles had graphics. The advantage of a computer scientist’s computer was that you could write out calculations to do and make your own programs.

Most consoles AVGN shows from around the 70s or early 80s were not general purpose computers at all. They didn’t run an operating system or kernel, really.

From there, we can get to a “modern computer” that “feels like magic” easily. You could do the same things even if your computer was huge as you could on an actual modern laptop. It would be way slower because of the less intricate and fast-running circuitry, but the modern fully general purpose computer that does almost anything you want it to is quite trivial to make from this point given the time.

What we actually want is a general purpose computer that’s almost just like what we have today. It should be able to program stuff AND render graphics. How this actually happened in computing history started with Unix, and parts of Unix are still a standard on almost every computer used today that’s hard to ever change.

So how the frick do computers work then?

Computers work by building up mathematical concepts so you don’t need to remember every simpler one in all its detail. They manipulate numbers at a tiny scale compared to their size (at least for complex computers).

You should also consider the fact that if a scientist or mathematician had actually completely forgotten how to divide a number by another and the details of what it means, they could still easily put an equation involving division into a computer and work it out, then use the result for future calculations. Computers allow you to not have to constantly think about simpler concepts, while still building up more detailed ones.

I think the reason so many people are so perplexed by computers is that the reason they are so incredibly useful is because they allow you to forget how they work. They help you forget how they work. You never need to learn how a computer works in detail. Operating modern computers is very easy. When you watch a video online, are you constantly thinking about the network and how the video is rendering? When writing code in a highly abstract coding language like Python, you don’t need to worry about issues you may have in C, so you can write a program that achieves your goal with much less thinking needed.

I think it would be hard to disagree with me when I say that computers are the most powerful tool humanity has ever had for developing new technologies. That’s why World War 2 or any older time period looks so different to today. Even though they may have had primitive versions of much of the tech we use now, computers stopped people from having to think about every intricate detail at once. That’s why most technical exams do allow you to bring in your own personal computer, a pocket calculator. Computers help to remove unnecessary thinking so you can further knowledge overall.

What would I actually draw on the whiteboard to answer the question? Only two things: a very short phrase and a simple diagram.

A computer is a Turing machine. That’s how it works.

My diagram would be a practically uncountable number of tiny switches that connect to an input and a screen (and each other, in far more complicated ways). The switches are constantly changing as the whole computer changes state. Input switches are linked to the switches doing calculations or moving numbers to storage switches, and there are a special group of switches connected to pixels on the screen to turn them on and off.

AT&T, a US telephone company, hired some experts to go and do almost everything in computing history again from scratch, because they wanted their own computing lab for processing data at their company. The experts working for AT&T actually did it all, and they used a much cleverer method than anyone before them.

One of their employees (Dennis Ritchie, who it could be argued invented modern home computers) came up with a way to make creating an operating system easier. Instead of writing it all in assembly code like everyone else did in order to make their more primitive computers that just ran a simple kernel you could execute a few intricately coded commands in, or one game stored on a ROM cartridge, he started by making an abstraction on top of assembly code.

Dennis Ritchie used something like assembly code, or in reality possibly old punchcards, which manipulated the levers in the device most directly, to write another programming language first that could be converted into assembly. The AT&T workers wrote their operating system in that, because it was better in a lot of ways.

As long as Dennis Ritchie’s compiler was working properly, nobody at AT&T had to worry about levers anymore. This was huge when it came to making coding easy. It certainly doesn’t take a genius to write a program in C that runs a few instructions to do a calculation. Writing a complicated operating system in C involved a lot less thinking than other people would need at the time, though you could insert assembly if needed, and using a computer (lab) was still quite hard.

Some people at the time said Unix should be slower than a system hand-coded in assembly to run one particular set of instructions. In principle that’s true, but the C compiler produced pretty efficient code and a lot of people were worse at assembly or punchcards than Ritchie, so it was still quite fast.

C programs like Unix were also easy to port to other systems. You just needed to make your own C compiler (a program) that could turn C code into your computer’s machine code. You could also rewrite the compiler itself in C afterwards, so features could be added to it or it could be better optimised by using more efficient algorithms.

Unix was arguably the first proper modern OS. If your computer (lab) could run Unix, it could do anything we can do today, given more coding. Graphics could be written in C on top of Unix. Eventually, modern operating systems would just boot into a graphical screen asking for a password. None of that is much extra abstraction on top of the computers they already had.

If you read all this or knew some of this operating system/kernel stuff before, you might be wondering where Unix went. Basically, most of what we use today as the operating system that’s always running and decides stuff like what’s in the levers corresponding to RAM is either descended from Unix or works in a very similar way.

Unix only stopped being a thing because it had licensing restrictions from AT&T. Other groups then put together operating systems with kernels that started from the same ideas and had very similar intentions to Unix.

The Linux kernel is meant to behave almost exactly like the Unix one, although Linus Torvalds wrote it from scratch rather than copying what was originally written for the Unix kernel. Linux and the standard programs in its shell can be seen as a very direct continuation of what Unix was. In effect, Linux is a clone of Unix.

How Unix worked is basically how all the impressive computers everyone walks around with work today and have for a long time.

As a side note before I get fully back on topic and wrap up (it’s fine to skip this if you just wanna know how I imagine computers work), there’s one other major figure who many see as just as important as Torvalds to free and open source software. Richard Stallman has what many see as his own strange moral code, which he made up himself and constantly preaches. In the early days of computers, he became strongly aware that anything written in C and compiled to a state of levers (machine code) to be run on a computer was very difficult for a human brain to understand.

Programs you can run are just a long series of 0s and 1s, and nobody except computer geniuses can figure out what they do. Even if you are a computer genius, decoding what all the levers do to each other in a complex program is a very daunting task. Stallman believes people everywhere should only trust and execute code when they can obtain and study the human-written source (a much stricter version of never running spiderman.exe from a dodgy site). He thinks you shouldn’t trust anyone, especially companies, who won’t give you a program’s full source code to study, modify and show other people. He has given many talks and written many articles on this topic, and for a long time he has spent a lot more time on that than on coding.

He also started GCC, a fully free and open source C compiler that is widely used today (along with a few other Unix tools he rewrote), with the help of many volunteer coders who believed in his cause. You could also argue that he and his supporters are the reason almost all programming languages nowadays are free and open source.

This is why a small proportion of people across the world think it’s a lot more sensible to run Linux on your personal computers (or to a lesser extent Android, which uses a heavily modified Linux kernel), and that you’re a lot more free to manipulate your computer if you do. People who think that are not necessarily paranoid or trying to avoid being caught doing crimes like fraud or hacking databases over the internet, though a smaller proportion again might be. Government intelligence agencies, server administrators and other enthusiasts generally use Linux for cybersecurity and networking.

Well modern CPU design requires quite a lot of physics I think

A lot of physics and chemistry, I’d say at least as far back as the early 80s. Using light to pattern silicon at the nanometer scale is real magic

You could probably make a highly mechanical computer that does almost anything any other computer does (like run Linux) if you were super clever, but it would be huge and incredibly slow. At least you wouldn’t have to know too much about electrons though I guess.

Computer Engineer but ok

Computer Scientist but ok

Any study at a lower level than the mathematics of information processing falls under computer engineering. Computer science is more of a mathematical discipline than anything else; computer engineering is more the intersection of electrical engineering and computer science.

Computer scientists wouldn’t do any research about the electromagnetics of how a computer works, they just trust that the electromagnetic design of the hardware creates a valid Turing machine for them to design programs for.

Computer Magician but ok

Hates computers but ok

Computer lover but ok

pushes up glasses

Actually, that’s computer engineering.

Each level looks like something I’d expect an undergrad to have at least seen before to be honest.

This is a great watch: https://youtube.com/playlist?list=PLowKtXNTBypGqImE405J2565dvjafglHU&si=f-jWdjiFCkVjmgDH

Thank you for my next week at work.

What’s the physics behind the giant perky fur tits and how is the collar staying closed.

It’s magic.

estrogen

CuuuuUUUUte!

cUwUte