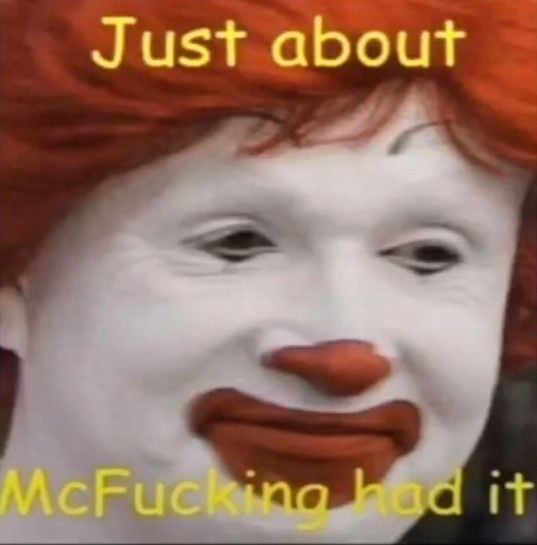

If you legitimately got this search result, please fucking reach out to your local suicide hotline and make them aware. Google needs to be absolutely sued into the fucking ground for mistakes like these, which are

- Google trying to make a teensy bit more money

- Absolutely going to push at least a few people over the line into committing suicide.

We must hold companies responsible for bullshit their AI produces.

This seems to not be real (yet) though.

Is this not real? I’ve done some due diligence on Google and it’s been inconclusive. I’d really like to know, as there are starry-eyed salespeople who keep pushing hard for integrating customer-facing AI, and I’ve been looking for a concrete example of it fucking up that’d leave us really liable. This and the “add glue to cheese” one are both excellent examples whose veracity I haven’t been able to verify.

This is from the account that spread the image originally: https://x.com/ai_for_success/status/1793987884032385097

Alternate Bluesky link with screencaps (must be logged in): https://bsky.app/profile/joshuajfriedman.com/post/3ktarh3vgde2b

Just so others do not need to click etc.: they found out it was faked and apologized for spreading fake news.

Thank you, internet sleuth!

I’m not sure how you’d tell unless there is some reputable source that claims they saw this search result themselves, or you found it yourself. Making a fake is as easy as inspect element -> edit -> screenshot.

I gotchu on the cheese

My comment (relied on another user’s modified prompt to avoid Google’s incredibly hasty fix)

The stupidity of adding unsanitized AI output to search results is real; those very specific memetic searches leading to a single Reddit comment seem not to be.

Another point to notice: I doubt any LLM would say “one reddit user suggests”.

- Be depressed

- Want to commit suicide

- Google it

- Gets this result

- Remembers comment

- Sues

- Gets thousands of dollars

- Depression cured (maybe)

Lots of dead famous rich people show that money does not cure depression.

Not for everyone, but it would help a lot of people who have depression that was caused primarily by financial stress, working in a job/career that they aren’t passionate about, etc…

Money doesn’t buy happiness but it can help someone who is struggling to meet their basic needs not get stuck in a depressive state. Plus, it can be used in exchange for goods and services that show efficacy against depression.

What kind of goods and services?

Everyone’s brain is different. For some, SSRIs might work. For others, SNRIs. While there are claims of cocaine and prostitutes being helpful for some, that’s not really scientifically proven and there are significant health and imprisonment risks. There is, however, strong evidence for certain psychedelics.

TL;DR - Drugs might be helpful for some.

The sibling comment said drugs, which may be effective for some people, but I’d actually just highlight “leisure”: being able to afford to explore where your mind takes you is a luxury that pays off massively for your mental health. I have wanderlust and I’m a programmer; sometimes my legs want to move and, with my understanding boss, I can go out into the world and walk along the beaches or through the forest while I ponder problems… This is a huge boon for my mental health and is something most employees can’t afford due to monetary stresses and toxic employers.

well at least you’d be suicidal with money!


i pulled the image from a meme channel, so i don’t know if it’s real or not, but at the same time, the one below does look like a legit response

Leaving my chicken for 10 minutes near a window on a warm summer day and then digging in

It’s like sushi… kinda

So you can put raw chicken meat inside your armpit and it’s done? Sounds legit.

If you have a fever.

Slight fever.


…does the chicken’s power level need to be over 9000 in order to be safe to eat?

Turns out AI is about as bad at verifying sources as Lemmy users.

I have read elsewhere that it was faked.

(Edit: meaning the original, with the Golden Gate Bridge)

what are you whining about? Hallucination is inherently part of LLMs as of today. Anything out of them should not be trusted with certainty. But employing it will have more benefits than just hiding it from everyone. Take it as an unfinished project, so ignore the results if you like. Seriously, it’s physically possible to actually ignore the generative results.

“Absolutely sued” my ass

I absolutely agree, and I consider LLM results to be “neat” but never trusted: if I think I should bake spaghetti squash at 350, I might ask an LLM and only look for real advice if our suggested temperatures differ.

But some people have wholly bought into the “it’s a magic knowledge box” bullshit. You’ll see opinions here on lemmy that generative AI can make novel creations that indicate true creativity… You’ll see C-level folks who believe LLMs can replace CS wholesale and are chomping at the bit to downsize call centers. Companies need to be careful about deceiving these users, and those that feed into the mysticism really need to be stopped.

you’ll see opinions here on lemmy that generative AI can make novel creations that indicate true creativity…

Yeah, I’m not jumping on that bandwagon yet, but I think no one is able to determine either side of that. It makes terrific art, so it’s not out of the realm of possibility. The only sensible stance we can take right now is none, and just wait and see whether AI art can hold up in the long run.

Taking any serious stance right now a priori would be illogical. I don’t understand the fuss people make it out to be. Yes, artists will suffer financially and will therefore limit their time investment and advancements in art, but sacrificing or halting the development of AI for them is also not a possibility. So yes, artists are fucked right now, but there is nothing we can do about that right now. Hopefully, some UBI, but that’s not here now.

But yes, companies deceiving people and not warning about AI hallucinations is bad. But it’s not their fault people believe stupid shit, because they have always believed and will always believe stupid shit.

It’s faked.

Should Reddit or Quora be liable if Google used a link instead? AI doesn’t need to work 100% of the time. It just needs to be better than what we are using.

What you’re focused on is actually the DMCA safe harbor provision.

If Reddit says, “We have a platform and some dumbass said to snort granulated sugar” it’s different from Google saying, “You should snort granulated sugar.”

That’s… not relevant to my point at all.

Make it Apple employees in stores and Microsoft forums. If humans give bad advice 10% of the time and AI (or any technological replacement) makes mistakes 1% of the time, you can’t point to that 1% as a gotcha.

You’re shifting the goalposts, though. Prior to AI, being an expert reference on the internet was expensive and dangerous, since you could potentially be held liable; as such, a lot of topic areas simply lacked expert reference sources. Google has declared itself an expert reference in every topic by using Gemini. It isn’t, and this will end badly for them.


Ah yes. Because that one Reddit user’s opinion holds equal weight to the thousands of professionals in the eyes of an LLM.

This is gonna get worse before it gets better.

I’d say it’s not the LLM at fault. The LLM is essentially an innocent. It’s the same as a four-year-old being told that if they clap hard enough they’ll make thunder. It’s not the kid’s fault that they’re being fed bad information.

The parents (companies) should be more responsible about what they tell their kids (LLMs).

Edit: disregard this, though, if I’ve completely misunderstood your comment.

I mean - I don’t think anyone’s solution to this issue would be to put an AI on trial… but it’d be extremely reasonable to hold Google responsible for any potential damages from this and I think it’d also be reasonable to go after the organization that trained this model if they marketed it as an end-user ready LLM.

Yeah that’s my point, too. AI employing companies should be held responsible for the stuff their AIs say. See how much they like their AI hype when they’re on the hook for it!

Sorry, I didn’t know we might be hurting the LLM’s feelings.

Seriously, why be an apologist for the software? There’s no effective difference between blaming the technology and blaming the companies who are using it uncritically. I could just as easily be an apologist for the company: not their fault they’re using software they were told would produce accurate information out of nonsense on the Internet.

Neither the tech nor the corps deploying it are blameless here. I’m well aware that an algorithm only does exactly what it’s told to do, but the people who made it are also lying to us about it.

Sorry, I didn’t know we might be hurting the LLM’s feelings.

You’re not going to. CS folks like to anthropomorphise computers and programs, doesn’t mean we think they have feelings.

And we’re not the only profession doing that, though it might be more obvious in our case. A civil engineer, when a bridge collapses, is also prone to say “is the cable at fault, or the anchor?” without ascribing feelings to anything. What it is, though, is ascribing a sort of animist agency, which comes naturally to many people when wrapping their heads around complex systems full of different things, well, doing things.

The LLM is, indeed, not at fault. The LLM is a braindead cable anchor that some idiot put in a place where it’s bound to fail.

I’d say it’s more that parents (companies) should be more responsible about what they tell their kids (customers).

Because right now the companies have a new toy (AI) that they keep telling their customers can make thunder from clapping. But in reality the claps sometimes make thunder but are also likely to make farts. Occasionally some incredibly noxious ones too.

The toy might one day make earth-rumbling thunder reliably, but right now it can’t get close and saying otherwise is what’s irresponsible.

This is gonna get worse before it gets better.

Then it’ll get worse again.

i like how the answers are the exact same generic unhelpful drivel you hear 20k times a month if you’re depressed as well. real improvement there. when people google that they want immediate relief, not fucking oh go for a walk every day, no shit. the triviality of the suggestion makes the depression worse because you know it’s going to do nothing the first week besides make you feel sweaty and looked at and alone. like if i’m feeling recovered enough to go walk every day then i’m already feeling good enough that i don’t need to be googling about depression tips. this shit drives me insane.

when people google that they want immediate relief

Well, bad news as far as ‘immediate relief from depression’ goes.

Though I suppose there’s always ketamine.

Well, the Golden Gate suggestion is the immediate solution…

I mean, they put nets on it now. This advice is outdated. Stupid AI.

For some. Some of us would take at least a week to get there. Surely there must be a bridge on the east coast that works!

The ultimate question of philosophy…

“Should I kill myself, or have a cup of coffee?”

-Camus

The trouble with ketamine is that once you reassociate, shit’s back to where it was. It can alleviate symptoms, and in very serious cases that might be called for, but it’s definitely not a cure. Taking drugs to lower a fever also alleviates symptoms, and in serious cases will save lives, but it’s not going to get rid of the bug causing the fever.


i like how the answers are the exact same generic unhelpful drivel you hear 20k times a month if you’re depressed as well.

It makes sense though. It was trained on that drivel.

when people google that they want immediate relief, not fucking oh go for a walk every day,

The problem is that there is no immediate relief that isn’t either a) suicide, or b) something that will make things worse in the long run. Even something like ECT doesn’t work instantly; it takes several treatments. Transcranial magnetic stimulation seems promising, but it’s not a frontline treatment. The generic shit is the stuff that actually works in the long run: things like getting therapy, exercising, going outside more, interacting with people in a positive way, and so on. “Self care” (isolating and doing easy, comfortable things) will make things worse in the long run.

- Accept that your brain wants to do something different than what you had planned, thus

- Cancel all mid- to long-term appointments and

- Use the opportunity of not having that shit distracting you to reinforce good moment-to-moment habits. Like taking a walk today, because you can use the opportunity to buy fresh food today, to make a nice meal today, because that’s a good idea you can enjoy today while the back of your mind does its thing, which is not something you can do anything about in particular so stop worrying. And you probably don’t want to go shopping in pyjamas without taking a shower so that’s also dealt with. And with that,

- You have a way to set a minimum standard for yourself that will keep you away from an unproductive downward spiral and keep depression what it’s supposed to be, and that’s a fever to sweat out shitty ideas, concepts, and habits, none of which, let’s be honest, involve good food and a good shower. That’s not shitty shit you dislike.

The tl;dr is that depression doesn’t mean you need to suffer or anything. Unless you insist on clinging to the to-be-sweated-out stuff, that is. The downregulating of vigour is global, yes, and necessary to starve the BS, but if you don’t get your underwear in a twist over longer-term stuff, your everyday might very well turn out to simply be laid back.

…OTOH yeah if this is your first time and you don’t have either a natural knack for it or the wherewithal to be spontaneously gullible enough to believe me, good luck.

Also clinical depression as in “my body just can’t produce the right neurotransmitters, physiologically” is a completely different beast. Also you might be depressive and not know it especially if you’re male because the usually quoted symptom set is female-typical.

You’ve laid out your personal depression cure to someone stating that reading about other people’s depression cures is incredibly frustrating when you’re actually depressed.

It’s great that you’ve found a plan that works for you, but don’t minimise everyone else’s suffering by proposing your own therapy.

In most cases the best thing you can do to help is to try to understand how someone is feeling.

You’ve laid out your personal depression cure to someone stating that reading about other people’s depression cures is incredibly frustrating when you’re actually depressed.

That’s not what the complaint was about. The complaint was about the generic drivel. The population-based “We observed 1000 patients and those that did these things got better” stuff that ignores why those people ended up doing those things, ignorance of the underlying dynamics which also conveniently fits a “pull yourself up by the bootstraps” narrative. The kind of stuff that ignores what people are going through. Ignores which agency exists, and which not.

Read what I wrote not as a plan, “thou shalt get up at 6 and go on a brisk walk”; that’s BS and not what I wrote. Read it as an understanding of how things work dressed up as a plan. Going out and cooking food? Just an example; apply your own judgement of what’s good and proper for you moment to moment. You can read past the concrete examples, I believe in you.

In most cases the best thing you can do to help is to try to understand how someone is feeling.

The trick is to understand why you’re in that situation, what your grander self is doing, or at least trust it enough to ride along. Stop second-guessing the path you’re on and walk it, instead. You don’t really have a choice of path, but you do have a choice of footwear.

Or, put differently: what’s more important, understanding a feeling or where it’s coming from? Why it’s there? What it’s doing? What is its purpose? …what are the options? Knowing all this, many feelings will be more fleeting than you might think.

There’s an old Discordian parable, and actually read it; it’s not the one you think it is:

I dreamed that I was walking down the beach with the Goddess. And I looked back and saw footprints in the sand.

But sometimes there were two pairs of footprints, and sometimes there was only one. And the times when there was only one pair of footprints, those were my times of greatest trouble.

So I asked the Goddess, “Why, in my greatest need, did you abandon me?”

She replied, “I never left you. Those were the times when we both hopped on one foot.”

And lo, I was really embarrassed for bothering Her with such a stupid question.

I mean this in the nicest possible way, but you seem absolutely insufferable.

This is precisely the type of un-depress yourself advice that helps no one.

I seem to be speaking Klingon. I never told anyone to “un-depress” themselves. Quite the contrary, I’m talking about the necessity to accept that it’ll be the path you’re walking on for, potentially, quite a while. All I’m telling you is that that path doesn’t have to be miserable, or a downward spiral.

Make a distinction between these two scenarios: One, someone has a fever. They get told “stop having a fever, lower your temperature, then you’ll be fine”. Second, same kind of fever, they get told “Accept that you have a fever. Make sure to drink enough and to make yourself otherwise comfortable in the moment. Ignore the idiot with the ‘un-fever yourself’ talk”.

The story at least was genuinely good.

again, this is all long term executive function that you are generally incapable of performing or even contemplating when depressed. maybe you can protestant-work-ethic yourself out of depression but that doesn’t mean everyone can. oh yeah lemme just keep being fucking harsh with myself, that’s the ticket.

what i want to hear is

- take a bath

- have chamomile tea, it binds to your GABA receptors

- go outside to breathe the fresh air and look at the moon

- etc

simple, actionable things that don’t have barely-hidden contempt or disinterest behind them

I’m sorry what’s long-term executive function about cancelling your appointments? What’s harsh about it?

What about “take a bath” and “go outside to breathe” is less protestant-work-ethic than what I was saying?

The simple, actionable things are, precisely, the simple, actionable things. “Breathe in the fresh air” is not actionable when living in a city. “Sit on a bench and people-watch” is not actionable in the countryside. You know much better where you live, what simple things you could do right now. The point is not about the precise action, it’s about that it’s simple and actionable thus you should do it. Also, to a large degree, that it’s your idea, something you want.

i like how the answers are the exact same generic unhelpful drivel you hear 20k times a month if you’re…

Searching for a solution to any problem on the internet.

There are a million ad-laden sites that, in answer to a technical question about your PC, suggest that you run antivirus, System File Checker, oh, and then just format and reinstall your operating system. That is also 90 percent of the answers coming from “Microsoft volunteer support engineers” on Microsoft’s own support forums as well; just please like and upvote their answer if it helps you.

There are a million Instagram and TikTok videos showing obvious, trivial, shitty solutions to everyday problems as if they are revealing the secrets of the universe while they’re gluing bottle tops and scraps of car tires together to make a television remote holder.

There are a trillion posts on Reddit from trolls and shitheads just doing it for teh lulz and Google is happily slurping this entire torrent of shit down and trying to regurgitate it as advice with no human oversight.

I reckon their search business has about two years left at this rate before the general public regards them as a joke.

Edit: and the shittification of the internet has all been Google’s doing. The need for sites to get higher up in Google’s PageRank™ or be forever invisible has absolutely ruined it. The torrent of garbage now needed to ensure that various algorithms favour your content has fucked it for everyone. Good job, Google.

I feel like the user’s suggestion of “jumping off the Golden Gate Bridge” would be more impactful in that case, you know, to awaken your survival instincts, which prevents depression.

But on the off chance that someone actually goes and jumps off, a professional would probably not give that advice.

TIL I’m alone when I go for a walk

Lemmy would’ve suggested bean diet


You don’t have time to be depressed when you’re trying to fix xorg.conf. (yeah, I know, super dated reference, Linux is actually so good these days I can’t find an equivalent joke).

You haven’t lived until you’ve compiled a 3Com driver in order to get token ring connectivity so you can download the latest kernel source that has that new ethernet thing in it.

Editing grub.cfg from an emergency console, or running grub-update from a chroot is a close second.

Adding the right Modeline to xorg.conf seemed more like magic when it worked. 🧙🏼

You’ll also get knee-socks so win/win

Idk, are you using Arch? There seems to be a correlation, from what I’ve gathered from Lemmy


And you have a lot to (look forward to) do which might actually help

haha I really like Linux but that’s funny and somehow also true😂

Or not pooping for days

3 days of intensive treatment. That’ll sort ya

also suggesting that those beans could have been found in a nebula, dick

Feeling suicidal? No. Okay then try an all bean diet and see if it helps.

And installing Linux and axe-murdering anyone with a car.

At this point I can’t tell the joke ones from the real ones.

It’s all a joke

And now I’m thinking of the Comedian from Watchmen. Alan Moore knows the score…

simulAIcrum

Neither can ChatGPT

As comical and memey as this is, it does illustrate the massive flaw in AI today: it doesn’t actually understand context or what it’s talking about outside of a folder of info on the topic. It doesn’t know what a guitar is, so anything it recommends suffers from being sourced in a void, devoid of true meaning.

It’s called the Chinese Room, and it’s exactly what “AI” is. It recombines pieces of data into “answers” to a “question”, despite not understanding the question, the answer it gives, or the pieces it uses.

It has a very, very complex chart of which elements in what combinations need to be in an answer for a question containing which elements in what combinations, but that’s all it does. It just sticks word barf together based on learned patterns, with no understanding of words, language, context or meaning.

Yeah but the proof was about consciousness, and a really bad one IMO.

I mean, we are probably not more advanced than computers, which would indicate that consciousness is needed to understand context, which seems very shaky.

I think it’s kind of strange.

Between quantification and consciousness, we tend to dismiss consciousness because it can’t be quantified.

Why don’t we dismiss quantification because it can’t explain consciousness?

We can understand and poke on one but not the other I guess. I think so much more energy should be invested in understanding consciousness.

anything it recommends suffers from being sourced in a void, devoid of true meaning.

You just described most of reddit, anything Meta, and what most reviews are like.

It also doesn’t know what is true and what is BS unless it learns from a curated source. Truth needs to be verified and backed by fact; if an AI learns from an unverified or unverifiable source, it’s gonna confidently repeat what it learned, just like an average redditor. That’s what makes it dangerous, as all these millionaires/billionaires keep hyping up the tech as something it isn’t.

This image was faked. Check the post update.

Turns out that even for humans knowing what’s true or not on the Internet isn’t so simple.

Yes we know. We aren’t talking about the authenticity of the meme. We are talking about the fundamental problem with “AI”

You’re kind of missing the point. The problem doesn’t seem to be fundamental to just AI.

Much like how humans were so sure that theory of mind variations with transparent boxes ending up wrong was an ‘AI’ problem until researchers finally gave those problems to humans and half got them wrong too.

We saw something similar with vision models years ago when the models finally got representative enough they were able to successfully model and predict unknown optical illusions in humans too.

One of the issues with AI is the regression to the mean from the training data and the limited effectiveness of fine tuning to bias it, so whenever you see a behavior in AI that’s also present in the training set, it becomes more amorphous just how much of the problem is inherent to the architecture of the network and how much is poor isolation from the samples exhibiting those issues in the training data.

There’s an entire sub dedicated to “AteTheOnion”, for example. A model trained on social media data is going to include plenty of examples of people treating The Onion as an authoritative source and reacting to it. So when Gemini cites The Onion in a search summary, is it the network architecture doing something uniquely ‘AI’, or is it the model extending behaviors present in the training data?

While there are mechanical reasons confabulations occur, there are also data reasons which arise from human deficiencies as well.

The other massive flaw it demonstrates in AI today is it’s popular to dunk on it so people make up lies like this meme and the internet laps them up.

Not saying AI search isn’t rubbish, but I understand this one is faked, and the tweeter who shared it issued an apology. And perhaps the glue one too.

There are cases of AI using NotTheOnion as a source for its answer.

It doesn’t understand context. That’s not to say it’s completely useless; hell, I’m a software developer, and our company uses Copilot in Visual Studio Professional and it’s amazing.

People can criticise the flaws in it, without people doing it because it’s popular to dunk on it. Don’t shill for AI and actually take a critical approach to its pros and cons.

I think people do love to dunk on it. It’s the fashion, and it’s normal human behaviour to take something popular (especially popular with people you don’t like, e.g. in this case tech companies) and call it stupid. Makes you feel superior and better.

There are definitely documented cases of LLM stupidity: I enjoyed one linked from a comment, where Meta’s(?) LLM trained specifically off academic papers was happy to report on the largest nuclear reactor made of cheese.

But any ‘news’ dumping on AI is popular at the moment, and fake criticism not only makes it harder to see a true picture of how good/bad the technology is doing now, but also muddies the water for people believing criticism later - maybe even helping the shills.

Does anyone really know what a guitar is, completely? Like, I don’t know how they’re made, in detail, or what makes them sound good. I know saws and wide-bandwidth harmonics are respectively involved, but ChatGPT does too.

When it comes to AI, bold philosophical claims about knowledge stated as fact are kind of a pet peeve of mine.

It sounds like you could do with reading up on LLMs in order to know the difference between what it does and what you’re discussing.

Dude, I could implement a Transformer from memory. I know what I’m talking about.

You’re the one who made this philosophical.

I don’t need to know the details of engine timing, displacement, and mechanical linkages to look at a Honda civic and say “that’s a car, people use them to get from one place to another. They can be expensive to maintain and fuel, but in my country are basically required due to poor urban planning and no public transportation”

ChatGPT doesn’t know any of that about the car. All it “knows” is that when humans talked about cars, they brought up things like wheels, motors or engines, and transporting people. So when it generates its reply, those words are picked because they strongly associate with the word car in its training data.

All ChatGPT is, is really fancy predictive text. You feed it an input and it generates an output that will sound like something a human would write based on the prompt. It has no awareness of the topics it’s talking about. It has no capacity to think or ponder the questions you ask it. It’s a fancy lightbulb, instead of light, it outputs words. You flick the switch, words come out, you walk away, and it just sits there waiting for the next person to flick the switch.
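To make the “fancy predictive text” framing concrete, here is a deliberately crude sketch: a bigram model that counts which word follows which in some text, then greedily picks the most frequent follower. This is an illustrative assumption only; real LLMs use transformer networks over subword tokens and learned weights, not word-pair counts, and the function names below are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words followed it in the training text."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def predict(follows: Dict[str, Counter], start: str, length: int) -> List[str]:
    """Greedily extend `start` with the most frequent next word at each step."""
    out = [start.lower()]
    for _ in range(length):
        options = follows.get(out[-1])
        if not options:
            break  # this word was never followed by anything in training
        out.append(options.most_common(1)[0][0])
    return out


if __name__ == "__main__":
    corpus = "a car has wheels . a car has wheels and an engine . a car moves people ."
    model = train_bigrams(corpus)
    print(" ".join(predict(model, "a", 3)))
```

Run on that tiny corpus it prints “a car has wheels”, purely because those words co-occurred most often, with no notion of what a car is.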

No man, what you’re saying is fundamentally philosophical. You didn’t say anything about the Chinese room or epistemology, but those are the things you’re implicitly talking about.

You might as well say humans are fancy predictive muscle movement. Sight, sound and touch come in, movement comes out, tuned by natural selection. You’d have about as much of a scientific leg to stand on. I mean, it’s not wrong, but it is one opinion on the nature of knowledge and consciousness among many.

I didn’t bring up Chinese rooms because it doesn’t matter.

We know how chatGPT works on the inside. It’s not a Chinese room. Attributing intent or understanding is anthropomorphizing a machine.

You can make a basic robot that turns on its wheels when a light sensor detects a certain amount of light. The robot will look like it flees when you shine a light at it. But it does not have any capacity to know what light is or why it should flee light. It will have behavior nearly identical to a cockroach, but have no reason for acting like a cockroach.

A cockroach can adapt its behavior based on its environment, the hypothetical robot can not.

ChatGPT is much like this robot, it has no capacity to adapt in real time or learn.

Feels reminiscent of stealing an Aboriginal, dressing them in formal attire then laughing derisively when the ‘savage’ can’t gracefully handle a fork. What is a brain, if not a computer?

Yeah, that’s spicier wording than I’d prefer, but there is a sense they’d never apply these high measures of understanding to another biological creature.

I wouldn’t mind considering the viewpoint on its own, but they put it like it’s an empirical fact rather than a (very controversial) interpretation.

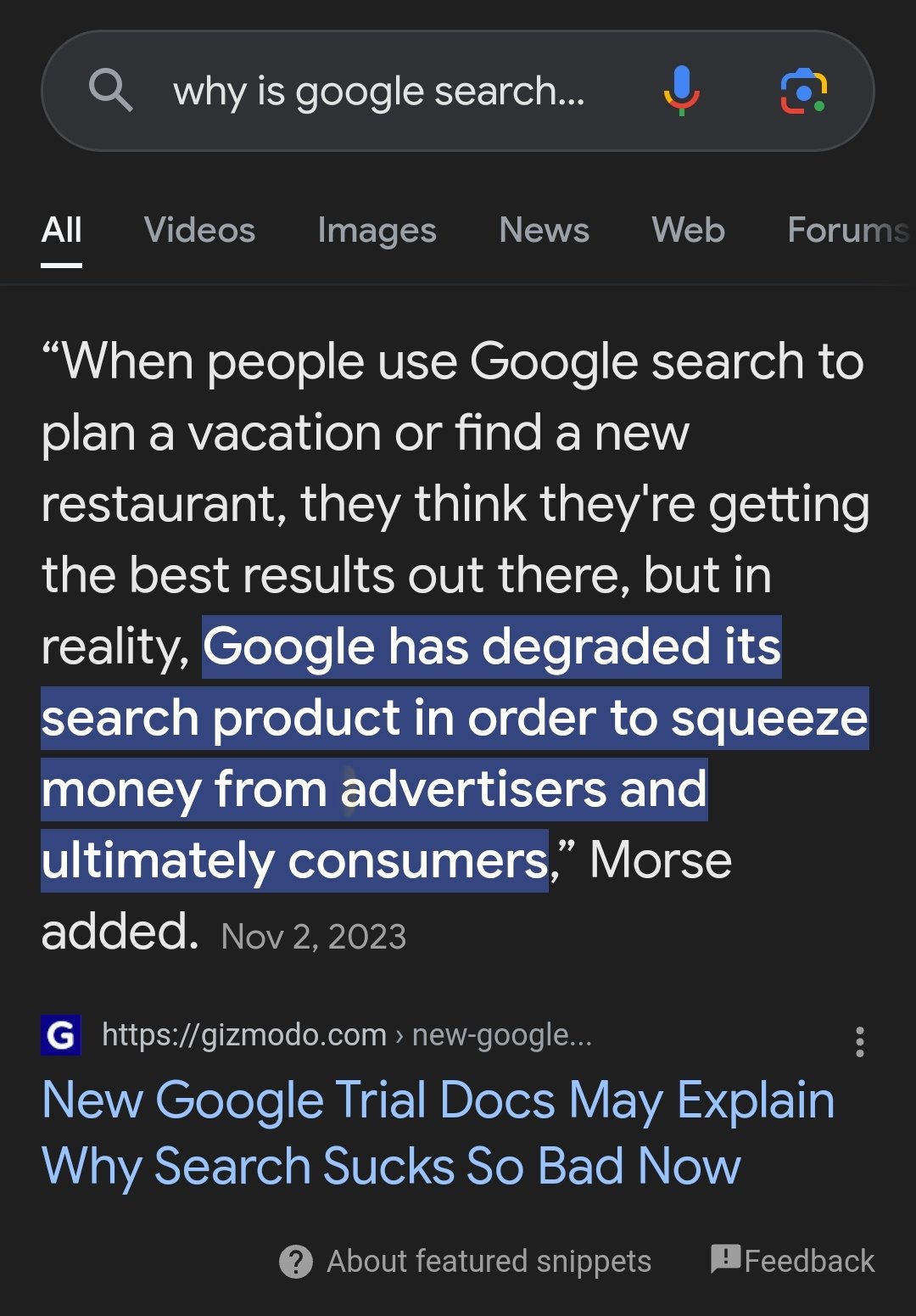

I just asked Google why its search is complete shit now. At least it isn’t being biased, lol 🤪

That’s not the AI, though; that’s a snippet from an article about how Google search is shit now

Yeah, when Google starts trying to manipulate the meaning of results in its favour, instead of just traffic, things will be at a whole other level of scary.

How do you stop people from jumping off the Golden Gate Bridge? Use glue! - Google, probably

People will die of hunger in the glue trap, but they’re not jumping!

Nah, it would say that glue is only useful for pizzas.

Simply turn yourself into cheese and the bridge into pizza, and then the glue will work perfectly!


Can we stop calling these glorified chat bots “AI” now?

Corps sure can’t

These chatbots are AI: they tailor responses over time so long as previous messages are in memory, showing a limited level of learning

The issue is these chatbots either:

A) Get so little memory that they effectively don’t even have short term memory, or

B) Are put in situations where that chat memory learning feature is moot

They are AI, they are just stupidly simple and inept AI that barely qualify

They have no memory actually. They are completely static. When you chat with them, every single previous prompt and response from that session is fed back through as if it were one large single prompt. They are just faking it behind a chat-like user interface. They most definitely do not learn anything after training is complete.
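A minimal sketch of the pattern this comment describes, assuming a frozen model exposed as a plain `prompt -> text` function: the chat wrapper keeps the transcript and re-sends all of it as one big prompt every turn, so a fresh session “remembers” nothing. `ChatSession` and `fake_model` are hypothetical stand-ins, not any vendor’s actual API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ChatSession:
    """Hypothetical chat wrapper around a stateless text model.

    The model function never changes between calls; the only "memory"
    is the transcript this wrapper stores and re-sends on every turn.
    """
    model: Callable[[str], str]  # stand-in for a frozen LLM: prompt in, text out
    history: List[Tuple[str, str]] = field(default_factory=list)

    def build_prompt(self, user_message: str) -> str:
        # Flatten every previous exchange plus the new message into one prompt.
        lines = []
        for user, assistant in self.history:
            lines.append(f"User: {user}")
            lines.append(f"Assistant: {assistant}")
        lines.append(f"User: {user_message}")
        lines.append("Assistant:")
        return "\n".join(lines)

    def send(self, user_message: str) -> str:
        reply = self.model(self.build_prompt(user_message))
        self.history.append((user_message, reply))
        return reply


def fake_model(prompt: str) -> str:
    # Toy stand-in: reports how many user turns it can "see" in its prompt.
    return f"I can see {prompt.count('User:')} user message(s)."


if __name__ == "__main__":
    chat = ChatSession(fake_model)
    print(chat.send("hi"))     # the model sees 1 user message
    print(chat.send("again"))  # the model sees 2, because the history was re-sent
    print(ChatSession(fake_model).send("new"))  # a fresh session sees only 1
```

The design point is that nothing inside `fake_model` changes between calls; any apparent memory lives entirely in the growing prompt.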

Their brain is a neural-net processor, a learning computer, but Skynet sets the switch to read-only when they’re sent out alone.

… No. They’re instanced so that when a new person interacts with them, they don’t have the memories of interacting with the person before them. A clean slate, using only the training data in the form the developers want it to. It’s still AI, it’s just not your girlfriend. The fact you don’t realize that they do and can learn after their training data proves people just hate what they don’t understand. I get it, most people don’t even know the difference between a neural network and AI because who has the time for that? But if you just sit here and go “nuh uh they’re faking it” rather than push people and yourself to learn more, I invite you, cordially, to shut the fuck up.

Dipshits giving their opinions as fact is a scourge with no cure.

I love how confidently wrong you are!

About which part? The part that they can remember and expand their training data to new interactions but often become corrupted by them so much so that the original intent behind the AI is irreversibly altered? That’s been around for about a decade. How about the fact they’re “not faking it” because the added capacity to compute and generate the new content has to have sophisticated plans just to continue running in a timely manner?

I’d love to know which part you took issue with but you seemingly took my advice to shut the fuck up and I do profoundly appreciate it.

That’s a completely different kind of AI. This story, and all the discussion up to this point, has been about the LLM based AIs being employed by Google search and ChatGPT.

And I explained to you that these models aren’t incapable of learning, they’re given artificial restrictions not to in order to prevent what I linked from happening. They don’t learn to preserve the initial experience but are the exact same kind of AI. Generative.

The thing these AI goons need to realize is that we don’t need a robot that can magically summarize everything it reads. We need a robot that can magically read everything, sort out the garbage, and summarize the good parts.

Good luck ever defining “good”.

good = whatever increases the stock’s value

/j

No joke there, you’re completely correct.

The thing is that that was how Google became so big in the first place. PageRank was a cool way of trying to filter out the garbage and it worked real well. Even my non techy friends have been getting frustrated with search not working like it used to (even before all this Gemini stuff was added)
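For reference, a minimal sketch of the textbook PageRank idea (power iteration with a damping factor, here assumed to be 0.85) on a made-up four-page link graph. This is the classic published formulation, not Google’s current production ranking, and the page names are hypothetical. A page nobody links to, like `spam` below, ends up with only the baseline share.

```python
from typing import Dict, List


def pagerank(links: Dict[str, List[str]], damping: float = 0.85,
             iterations: int = 100) -> Dict[str, float]:
    """Textbook PageRank via power iteration on a small link graph.

    `links` maps each page to the pages it links to. Pages with no
    outgoing links spread their rank evenly over all pages.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    pages = sorted(pages)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution

    for _ in range(iterations):
        # Every page gets the "random jump" share first.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page: distribute its rank uniformly.
                for t in pages:
                    new_rank[t] += damping * rank[page] / n
        rank = new_rank
    return rank


if __name__ == "__main__":
    graph = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "spam": ["c"]}
    for page, score in sorted(pagerank(graph).items(), key=lambda kv: -kv[1]):
        print(f"{page}: {score:.3f}")
```

Because rank flows along links, a page can only score well if other well-ranked pages point at it, which is roughly how the original scheme filtered out garbage.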

suicAId

SuicAid, the solution to your problems with suicide.

One reddit user suggests LOREM IPSUM DOLOR SIT AMET

I can’t wait to see my old horny comments about anime girls come up.

I just checked and they actually disabled AI Overview. LMAO

Is this real?

Seems not, as several people failed to reproduce it. At least, not yet real

I’m not sure, because google.com requires JavaScript, and when I enable it, it says there’s been too much malicious behavior from my VPN address and asks me to enable another host, gstatic (I’m guessing for a reCAPTCHA), so I gave up.

Guess all those SEO pranks came back to bite you in the ass, huh?

Nope, but there’s a whole thread of people talking about how LLMs can’t tell what’s true or not because they think it is, which is deliciously ironic.

It seems like figuring out what’s bullshit on the Internet is an everyone problem.