Whether self-hosted or in the cloud, I assume many of you keep a server around for personal things, and I’m curious about the cool stuff you’ve got running on your personal servers.

What services do you host? Anything unique? Do you interact with them through SSH, Termux, or a web server?

This might be a better question for !selfhosted

Lenovo ThinkStation P330 Tiny. Debian + Podman systemd quadlets, running these services:

- Jellyfin

- Sonarr

- Radarr

- qBittorrent w/ VPN

- Linkwarden

- Calibre Web

- Immich

- Lidarr

- Postgres

- Prowlarr

- Vaultwarden

The P330 Tiny is so good; I just wish there were a Ryzen version with a PCIe slot. Quick Sync is great, but I hate Intel.

Do you have any tips (or examples) for using quadlets? I tried using them, but I couldn’t wrap my head around them.

I used this guide https://www.redhat.com/sysadmin/quadlet-podman

I have a folder in my home folder called `containers`, symlinked to `/etc/containers/systemd`, with my `.container` files in it. This is my `jellyfin.container` for using the Nvidia Quadro on my server:

```ini
[Unit]
Description=Podman - Jellyfin
Wants=network-online.target
After=network-online.target
Requires=nvidia-ctk-generate.service
After=nvidia-ctk-generate.service

[Container]
Image=lscr.io/linuxserver/jellyfin:latest
AutoUpdate=registry
ContainerName=jellyfin
Environment=PUID=1000
Environment=PGID=100
Environment=TZ=America/St_Johns
Environment=DOCKER_MODS=ghcr.io/gilbn/theme.park:jellyfin
Environment=TP_THEME=dracula
Volume=/home/eric/services/jellyfin:/config
Volume=/home/eric/movies:/movies
Volume=/home/eric/tv:/tv
Volume=/home/eric/music:/music
PublishPort=8096:8096
PublishPort=8920:8920
PublishPort=7359:7359/udp
PublishPort=1900:1900/udp
AddDevice=nvidia.com/gpu=all
SecurityLabelDisable=true

[Service]
Restart=always
TimeoutStartSec=900

[Install]
WantedBy=default.target
```

I use `sudo podman auto-update` to update the images; it relies on the `AutoUpdate=registry` option.
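For anyone stuck on the same step: a `.container` file does nothing until systemd re-runs its generators, which is when Quadlet turns it into a regular service. A minimal sketch, assuming the rootful setup above (files under `/etc/containers/systemd`), where the unit name comes from the file name `jellyfin.container`:

```shell
# Re-run systemd generators; Quadlet turns jellyfin.container into jellyfin.service
sudo systemctl daemon-reload

# Start the generated unit now and check that it came up
sudo systemctl start jellyfin.service
sudo systemctl status jellyfin.service

# Show which containers podman auto-update would update, without restarting anything
sudo podman auto-update --dry-run

# Optionally run auto-update on a schedule via the timer that ships with Podman
sudo systemctl enable --now podman-auto-update.timer
```

Generated units can't be enabled with `systemctl enable`; the `WantedBy=` line in the `[Install]` section of the `.container` file is what makes the service start at boot.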

Two old HP thin client PCs configured as 4TB SFTP file servers using vsftpd on Debian. Each one uses software RAID 1 with an NVMe and a SATA SSD internally; they are in two separate locations, with a cron job that syncs one to the other every 24 hours.

People who actually know what they are doing will probably find this silly, but I had fun and learned a lot setting it up.

If it works reliably who cares?

Tell me about the cron thing. I’m thinking of doing just that on mine for backup.

are you scping them together?

I am using lftp and its mirror command. One server functions as the “main” server, which mirrors its files to the backup server once per day at a specific time (they both run 24/7, so I set it to run very early in the morning when it is unlikely to be accessed).

In my crontab I have:

```
[minute] [hour] * * * /usr/bin/lftp -e "mirror -eRv [folder path on main server] [folder path on backup server]; quit;" sftp://[user]@[address of backup server]:[port number]
```

TIL about lftp. I’m gonna be testing that one out, thanks.

No problem! Glad I could be of help, and best of luck on your project.
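One possible extension of the crontab entry above, for anyone copying it: wrap the `lftp` call in a small script so overlapping runs are skipped and output is logged. This is a sketch, not the poster's setup; the script name, paths, user, host, and port are placeholders, and it assumes SSH key authentication to the backup server plus `flock` from util-linux.

```shell
#!/bin/sh
# Hypothetical ~/bin/mirror-to-backup.sh, called from cron, e.g.:
#   30 3 * * * $HOME/bin/mirror-to-backup.sh >> $HOME/mirror-to-backup.log 2>&1
# All paths, the user, host and port are placeholders.
set -eu

SRC=/srv/files                                    # folder on the main server
DST=/srv/files                                    # folder on the backup server
REMOTE=sftp://backupuser@backup.example.net:2222  # SFTP target

# flock -n exits immediately if a previous run still holds the lock.
# mirror -R uploads local -> remote, -e deletes remote files missing locally, -v is verbose.
exec flock -n /tmp/mirror-to-backup.lock \
    lftp -e "mirror -eRv $SRC $DST; quit" "$REMOTE"
```

Because `-e` deletes files on the backup that no longer exist on the main server, an accidental deletion is propagated at the next run; running the same `mirror` with `--dry-run` first shows what would change.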

You might like to search this community, and also \c\self_hosted, since this question gets asked a lot.

For me:

- Audiobookshelf

- Navidrome

- FreshRSS

- Jellyfin

- Forgejo

- Memos

- Planka

- File Storage

- Immich

- Pihole

- Syncthing

- Dockge

I created two things - CodeNotes (for snippets) and a lil’ Weather app myself 'cause I didn’t like what I found out there.

How do you like FreshRSS? Do you use it on mobile too?

I love it. I do use it on mobile, in my browser too. I’ve been meaning to see what other clients are available for Android.

On my Raspberry Pi 4 (4 GB) with an encrypted SD card, I run:

- pihole

- wireguard server

- vaultwarden

- cloudflare ddns

- nginx proxy manager

- my website

- ntfy server

- mollysocket

- findmydevice server

- watchtower

The Pi is overkill for this kind of job. Load average is only 0.7 and RAM usage is only 400 MB.
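Load average counts runnable (and uninterruptible) tasks rather than measuring a percentage, so a load of 0.7 on the Pi 4's four cores is roughly 0.7 / 4, about 17.5% of its CPU capacity. A small sketch of that conversion, assuming Linux's `/proc/loadavg` format and `nproc` from coreutils; `load_percent` is a hypothetical helper name:

```shell
#!/bin/sh
# Sketch: express the 1-minute load average as a rough share of total CPU capacity.
# Arguments (both optional): a /proc/loadavg-style file and a core count.
load_percent() {
    src="${1:-/proc/loadavg}"
    cores="${2:-$(nproc)}"
    # The first field of /proc/loadavg is the 1-minute load average
    awk -v cores="$cores" '{ printf "%.1f%%\n", $1 / cores * 100 }' "$src"
}
```

Called with no arguments, it reads the live `/proc/loadavg` and uses the machine's own core count.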

findmydevice server

What server are you running for this?

can you tell us how you got this running with an encrypted SD card?

That was really hard to do. I created a note for myself, and I will also publish it on my website. You can also unlock the SD card with a FIDO2 hardware key (I have a Nitrokey). If you don’t need that, just skip the FIDO2 steps.

The note:

Download the image.

Format the SD card with a new DOS partition table:

- Boot: 512M 0c W95 FAT32 (LBA)

- Root: 83 Linux

As root:

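```shell
# Decompress the image and attach it as a loop device with partition scanning
# (assumed to land on /dev/loop0; `losetup -fP --show` prints the device actually used)
xz -d 2023-12-11-raspios-bookworm-arm64-lite.img.xz
losetup -fP 2023-12-11-raspios-bookworm-arm64-lite.img
# Copy the boot partition as-is
dd if=/dev/loop0p1 of=/dev/mmcblk0p1 bs=1M
# Encrypt the root partition; Adiantum is fast on CPUs without AES instructions, like the Pi 4's
cryptsetup luksFormat --type=luks2 --cipher=xchacha20,aes-adiantum-plain64 /dev/mmcblk0p2
# FIDO2 only: enroll the hardware key in an additional key slot
systemd-cryptenroll --fido2-device=auto /dev/mmcblk0p2
# Unlock it, copy the root filesystem in, and grow it to fill the encrypted partition
cryptsetup open /dev/mmcblk0p2 root
dd if=/dev/loop0p2 of=/dev/mapper/root bs=1M
e2fsck -f /dev/mapper/root
resize2fs -f /dev/mapper/root
# Mount everything and chroot in
mount /dev/mapper/root /mnt
mount /dev/mmcblk0p1 /mnt/boot/firmware
arch-chroot /mnt
```

In chroot: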

```shell
apt update && apt full-upgrade -y && apt autoremove -y
apt install -y cryptsetup-initramfs fido2-tools jq debhelper git vim
# FIDO2 only: build and install the fido2luks keyscript package
git clone https://github.com/bertogg/fido2luks && cd fido2luks
fakeroot debian/rules binary && sudo apt install ../fido2luks*.deb
cd .. && rm -rf fido2luks*
```

Edit `/etc/crypttab`:

```
root /dev/mmcblk0p2 none luks,keyscript=/lib/fido2luks/keyscript.sh
```

Edit `/etc/fstab`:

```
/dev/mmcblk0p1 /boot/firmware vfat defaults 0 2
/dev/mapper/root / ext4 defaults,noatime 0 1
```

Change `root` to `/dev/mapper/root` and add `cryptdevice=/dev/mmcblk0p2:root` to `/boot/firmware/cmdline.txt`.

```shell
PATH="$PATH:/sbin" update-initramfs -u
```

Exit chroot and finish!

```shell
umount -R /mnt
```

Thank you so much! Will make a note of this.

No problem ;)
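The `cmdline.txt` step in the note above can also be scripted, which helps if you ever rebuild the card. A minimal sketch, assuming a single-line `cmdline.txt` containing a `root=` parameter (as Raspberry Pi OS ships it) and GNU sed; `patch_cmdline` is a hypothetical helper name, and the device names are the ones from the note.

```shell
#!/bin/sh
# Hypothetical helper: point root= at the unlocked LUKS mapping and add cryptdevice=.
# Assumes a one-line cmdline.txt with a root=... parameter, and GNU sed (-i, -E).
patch_cmdline() {
    file="${1:-/boot/firmware/cmdline.txt}"
    # Replace only the value of root=; rootfstype= and rootwait are left alone
    sed -i -E 's#(^| )root=[^ ]+#\1root=/dev/mapper/root#' "$file"
    # Append cryptdevice= once, so running the helper twice is harmless
    if ! grep -q 'cryptdevice=' "$file"; then
        sed -i -E '1s#$# cryptdevice=/dev/mmcblk0p2:root#' "$file"
    fi
}
```

Inside the chroot it would run before `update-initramfs -u`, e.g. `patch_cmdline /boot/firmware/cmdline.txt`.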

I have an Orange Pi Zero 3 with Pihole

Then an ITX PC with

- Mealie (meal planner, recipe parser, grocery list maker with a bunch of features and tools)

- Immich for self-hosting a Google Photos alternative

- *arr stack for torrenting Linux ISOs

- Jellyfin for LAN media playing

- Home Assistant for my VW car, our main hanging renovation lights, smoke and CO monitors, and in the future, all of the KNX smart systems in our house

- Syncthing for syncing photo backups and my music library with my phone

- BookStack for a wiki, todos, journal, etc. (because I didn’t want to install better journaling services when I don’t journal much)

- paperless-ngx for documents

- Leantime for managing my personal projects, tasks, and timing

- Valheim game server

- Calibre-Web for my eBook library backup

- I had Nextcloud, but it completely broke on an update: I can’t even see the login fields anymore, and it just loads forever until it takes down my network and server. I ditched it since I never used it anyway.

- CrowdSec for much better (preemptive) security than fail2ban

- Traefik for reverse proxy

As a person who actually torrented a Linux ISO on Friday, thank you! Lol

- countless “read later” PDFs… and cat pictures

Cat pictures? Definitely the best possible use of a server 😄


Have you integrated your Matrix instance with Keycloak? I’ve been wanting to set it up so local Matrix users can SSO into other stuff like Jellyfin with just their Matrix ID.


Minetest server, *arr suite, Plex, Pihole, Calibre, Home Assistant, Nextcloud.

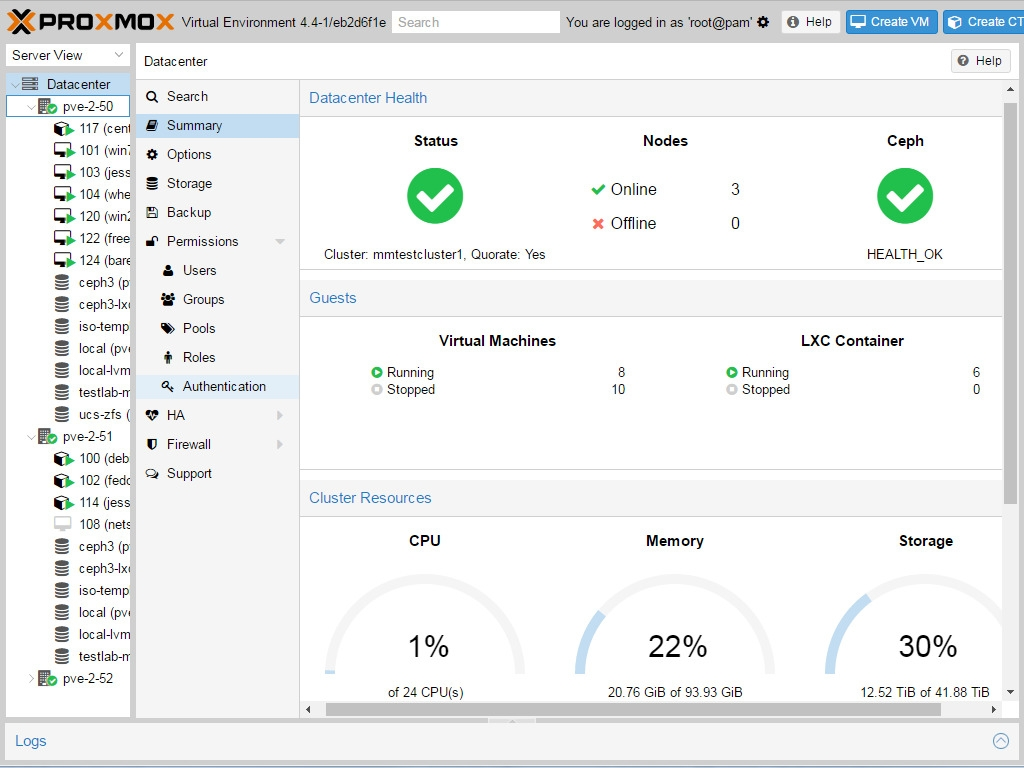

I interact with it through a Homarr webpage, and all of it is virtualized through Proxmox.

I’ve been a software engineer for 8 years and I’ve had my own Jellyfin server (and before that, Plex) set up for 4 years on a server that I built myself.

Despite this, I don’t have a damn clue what “virtualized through Proxmox” means any time I read it.

They are just running things in VMs. They may even have a cluster with some sort of high availability.

Or containers, but LXC rather than Docker-style ones. They behave like full VMs but are super lightweight. Perfect for some needs.

I personally find that LXC really isn’t better than a VM.

U crazy! LXC is incredibly lightweight compared to a VM; I’m often amazed at what it can do with just a few hundred MB of memory.

Also you can map storage straight from the host and increase allocation instantly, if needed. Snapshotting and replication are faster too.

I’m always bummed when I’m forced to run a VM, they seem archaic vs PVE CTs. Obviously there are still things VMs are required for, though.
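The storage, resizing, and snapshot points above correspond to Proxmox's `pct` command-line tool. A rough sketch, run from the Proxmox host shell and assuming a container with the hypothetical ID 101 and a hypothetical host directory `/tank/media`; snapshots also need storage that supports them, such as ZFS or LVM-thin.

```shell
# Bind-mount a host directory into container 101 at /media
pct set 101 -mp0 /tank/media,mp=/media

# Grow the container's root disk by 8 GB
pct resize 101 rootfs +8G

# Cap the container at 512 MB of memory
pct set 101 --memory 512

# Snapshot before an upgrade, and roll back to it if the upgrade goes wrong
pct snapshot 101 before-upgrade
pct rollback 101 before-upgrade
```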

Proxmox is a hypervisor, like VMware. They are just running containers and/or VMs; Proxmox is the management interface.

You use Homarr with Proxmox? I should look into that.

Ya, I have it running as an LXC. Here’s a script you can run in your Proxmox shell that will create it for you:

```shell
bash -c "$(wget -qLO - https://github.com/tteck/Proxmox/raw/main/ct/homarr.sh)"
```

Plex, transmission, home assistant, some SSH tunnels, some custom home automation endpoints.

ATM I have the following running:

- Caddy

- NextCloud

- Webpress

- Plex

- Actual Budget

- Portainer

- Vaultwarden

- Grafana

- Stable Diffusion

- QBT

- *arr stack

- 4 Debian instances with differing bits and bobs on

- MIT Scratch

- Neon KDE (Drives lounge TV)

- Win10 and 11 VMs

- TrueNAS

- OPNsense

- Homepage

- Navidrome

- SoulSeek

Curious about the specs of your machine.

It’s an i5 13xxx with 64GB RAM, an HBA passed through to TrueNAS with 7 disks on it, and a second network card passed through to OPNsense for WAN/LAN.

All the above runs in Proxmox and still has a bit of room for expansion ;) This was a 50th birthday present to myself, replacing an IBM M4 space heater.

NUC 8i5, 32GB, 500GB NVMe (host), 8TB SSD (data), Akasa Turing fanless case, running Proxmox:

- samba

- syncthing

- pihole

- radicale

- jellyfin

- minidlna

I also have a Pi 4 running LibreELEC for Kodi on the home theater. Nothing fancy yet, and it more than meets our current needs. Most maintenance is done over SSH.

Would like to eventually get a proper web and email server going (yes, I know).

I use Docker and (currently) VMware and host whatever I need for as long (or short) as I need it.

This allows me to keep everything separate and isolated, and prevents incompatible stuff from interacting. In addition, after I’m done with a test, I can dispose of the experiment without needing to track down stray files or impacting another project.

I also use this to run desktop software by only giving a container access to the specific files I want it to access.

I’m in the process of moving this to AWS, so I have less hardware in my office whilst gaining more flexibility and accessibility from alternative locations.

The ultimate aim is a minimal laptop with a terminal and a browser to access what I need from wherever I am.

One side effect of this will be the opportunity to make some of my stuff public if I want to, without needing to start from scratch; just updating permissions will achieve that.

One step at a time :)

- HomeAssistant and a bunch of scripts and helpers.

- A number of websites, some that I agreed to host for someone who was dying.

- Jellyfin and a bunch of media

- A lot of docker containers (Adguard, *arrs)

- Zoneminder

- Some routing and failover to provide this between my main server and a much smaller secondary (keepalived, HAProxy, some of the Docker containers)

- Some development environments for my own stuff.

- A personal diary that I wrote, which I’ve used to keep track of personal stats for 15 years

- Backup server for a couple of laptops and a desktop (plus automated backup archiving)

The main server is an ML110 G9 running Debian with 48 GB RAM, 2x 256 GB SSDs in RAID 1 as root, a 4 TB backup drive, a 4 TB CCTV drive, and a 4x 4 TB RAID 10 data array. (Putting CCTV and backup on their own drives lowers overall iowait a lot.) The second server is a baby ThinkCentre with 2 GB RAM and 1x 128 GB SSD.

Edit: Also Traccar, for tracking family phones. Really nice bit of software, and entirely free and private. It replaced Life360, which has a dubious privacy history.

Edit2: Syncthing - a recent addition to replace GDrive. Bunch of files shared between various desktops/laptops and phones.

Just Jellyfin and modded Minecraft right now. Nothing super interesting, but great fun.

I’m using SSH to interact with the Minecraft server in tmux, and the web interface for Jellyfin.