AI absolutely has the potential to enable great things that people want, but that’s completely outweighed by the way companies are developing them just for profit and for eliminating jobs

Capitalism can ruin anything, but that doesn’t make the things it ruins intrinsically bad

I used to be so excited about tech announcements. Like…I should be pumped for ai stuff. Now I immediately start thinking about how they are going to use the thing to turn a profit by screwing us over. Can they harvest data with it? Can they charge a subscription for that? I’m getting so jaded.

this but tech in general.

on the modern internet

when you search for knowledge or try to connect with others on social media or consume content as a form of escapism from this hellhole what do you get?

you get fed ads, sponsored posts, scams, algorithmically curated content to optimize your engagement, and now also AI drivel that’s inaccurate and sometimes dangerous. even off the internet you cannot escape it. there are cameras, microphones, and other sensors everywhere tracking your every movement to anticipate your thoughts and desires to try to sell you something or even manipulate the costs of what you’re already planning to buy to optimize profit.

i feel that the modern technological landscape is slowly driving me, a once tech supporter and enthusiast, toward some sort of neo-luddism to get away from it all.

and what’s it all boil down to?

money.

it’s always about the money.

> AI absolutely has the potential to enable great things that people want

The current implementation of very large data sets obtained through web scraping, very aggressive marketing of these services such that the results pollute existing online data sets, and the refusal to tag AI generated content as such in order to make filtering it out virtually impossible is not going to enable great things.

This is just spam with the dial turned up to 11.

> Capitalism can ruin anything

There’s definitely an argument that privatization and profit motive have driven the enormous amounts of waste and digital filth being churned out at high speeds. But I might go farther and say there are very specific bad actors - people who are going above simply seeking to profiteer and genuinely have megalomaniacal delusions of AGI ruling the world - who are rushing this shit out in as clumsy and haphazard a manner as possible. We’ve also got people using AI as a culpability shield (Israel’s got Lavender, I’m sure the US and China and a few other big military superpowers have their equivalents).

This isn’t just capitalism seeking ever-higher rents. It’s delusional autocrats believing they can leverage sophisticated algorithms to dictate the life and death of billions of their peers.

These are ticking time bombs. They aren’t well regulated or particularly well-understood. They aren’t “intelligent”, either. Just exceptionally complex. That complexity is what makes them both beguiling and dangerous.

I was gonna say, of all the things to be upset about AI, not enabling things that I want isn’t one of them. I use it all the time and find it incredibly useful for boring tasks I don’t care about doing myself. Just today I had to write some repetitive unit tests and it saved me a bunch of time writing syntax so I could focus on the logic. It sounds like OOP either hasn’t used it much or doesn’t have a use for it.

I think the problem is that for consumers, it’s most often being used for generating spammy ad revenue sites with plagiarized rewritten content. Or the ads themselves in the case of image generation.

I’m glad it’s working for writing unit tests, though it would seem that better build/debug systems could be designed to eliminate repetitive coding like that by now. But I quit web dev about 5 years ago, and don’t intend to go back.

> that doesn’t make the things it ruins intrinsically bad

Hmmm tricky, see for example https://thenewinquiry.com/super-position/ where capitalism is very good at transforming everything and anything, including culture in this example, to preserve itself while making more money for the few. It might not indeed change good things to bad once they already exist, but it can gradually change good things to new bad things while attempting to make them look like the good old ones it replaces.

Electricity led to more profits and eliminated jobs. Fuck capitalism.

jobs existing is the problem with capitalism, people shouldn’t need to work to live.

Corporate is pushing AI. It’s laughably bad. They showed off this automated test writing platform from Meta. That utility, out of 100 runs, had a success rate of 25%. And they were touting how great it was. Entirely embarrassing.

I’m so tired of these tech-bros trying to convince everyone that we need AI

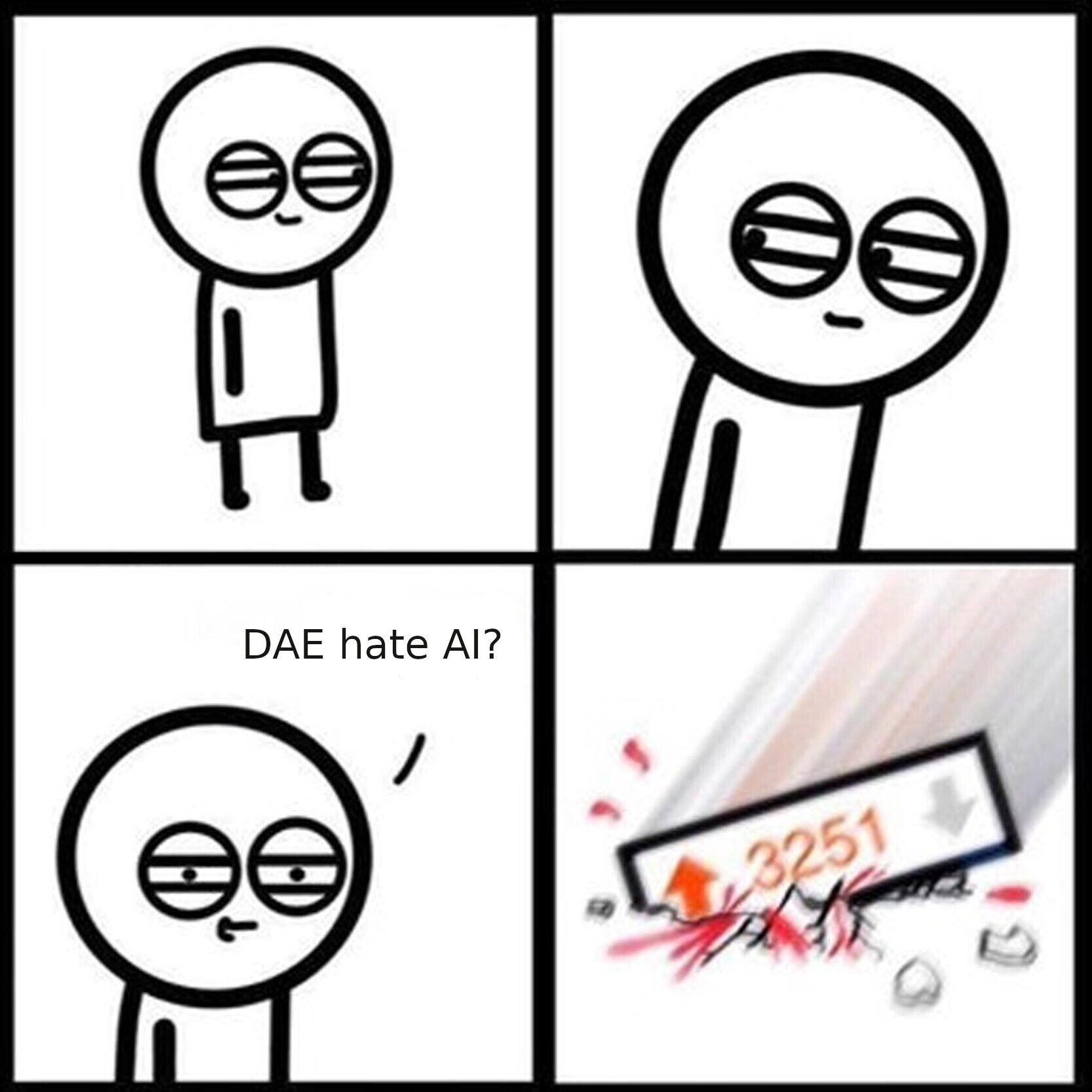

People leaving pro-AI comments in !fuck_ai@lemmy.world lmao

I thought echo chambers were a bad thing?

I also thought echo chambers were a bad thing.

Yeah right ?! glad we agree on this. Damn, we should hang out more often

As with anything, hating on AI is a spectrum. It’s a love-hate relationship for me.

People said the same things about the Internet when it came out and calculators before that

Calcgpt.io combines all 3 to demonstrate how right all of these detractors are.

“When I was young, they told me that AI would do the menial labor so that we could spend more time doing the things we love, like making music, painting, and writing poetry. Today, the AI makes music, paints pictures, and writes poetry so that I can work longer hours at my menial labor job.”

AI bros are like pro-lifers, straw-manning an argument nobody is making.

I didn’t use it for those things but I do use it every day for a multitude of tasks. For myself I use it far more than Google itself.

But these are the things people are complaining about - not AI itself, but the people making it, the reasons that they’re making it, and the consequences that that is having on the human condition.

For your example, people don’t complain about it making Google obsolete or something, but about the fact that LLMs like ChatGPT are wrong about 53% of the time and often completely make stuff up, and that the companies making and pushing them as a replacement for search engines have collectively shrugged their shoulders and literally said “there’s nothing we can do to prevent it” when asked about these “hallucinations” as they call them.

Which is absolutely hilarious to me given how often it hallucinates answers.

I recently tried ChatGPT as a Google alternative. It looked very impressive as it found things based on the slightest of clues. Except that literally everything it found was made up. It “found” a movie quote by Orson Welles that was never quoted. It “found” a song by an artist that said artist never released. It “found” an album by a group released four years before said group’s first release.

If you’re using ChatGPT as a Google replacement you are being dangerously misinformed by a degenerative “AI” that speaks with the certitude of a techbrodude.

Just ask it to provide links to back up its answers

Or, you know, I could just search for the links.

All ChatGPT adds to the equation now is burning down a rainforest or ten.

Or possibly it will help us solve the energy issues. It’s already led to some revolutionary discoveries in propulsion and many other areas. It really is far more than something to chat with, and to think otherwise is just silly. I use it to code, brainstorm and develop with. AI systems can process massive amounts of data, recognizing patterns and improving their performance over time. This has helped things like the medical field immensely, especially with early detection of cancers etc. Trying to be a Luddite won’t stop it. Might as well embrace and extend it for the betterment of all.

for generating filler text and in general making things more formal it can do a good job. yes, you do need to review its output, but that beats needing to write the whole thing in a less hostile tone.

I guess I was the sucker that just learned to write.

Then it’s likely you leave the carbon footprint of an 18-wheeler

Oh yeah. Probably more.

The world is a wildly different place now, and the people developing them were headed by people motivated by reasons other than extracting as much money out of the world at any cost.

This is not nearly as comparable.

Beyond that, very few people had an issue with AI as fuzzy logic and machine learning. Those techniques were already in wide use all over the place to great success.

The term has been co-opted by the generative, largely LLM folks to oversell the product they are offering as having some form of intelligence. They then pivot to marketing it as a solution to the problem of having to pay people to talk, write, or create visual or audio media.

Generally, people aren’t against using AI to simulate countless permutations of motorcycle frame designs to help discover the most optimal one. They’re against wholesale reduction in soft skill and art/content creation jobs by replacing people with tools that are definitively not fit to task.

Pushback against non-generative AI, such as self-driving cars, is general fatigue at being sold something not fit to task and being told that calling it out is being against a hypothetical future.

> the people developing them were headed by people motivated by reasons other than extracting as much money out of the world at any cost.

I mean… they were developing an information tool that could survive a nuclear strike. One of the ironies of the modern internet is how it’s become so heavily centralized and interdependent that it no longer fulfills any of the original functions of the system.

> Pushback against non-generative AI, such as self-driving cars, is general fatigue at being sold something not fit to task and being told that calling it out is being against a hypothetical future.

One could argue the same of the original internet. The Web1 tools were largely decentralized and difficult to navigate, but robust and resilient in the face of regional outages. Web2 went the opposite direction, engaging in heavy centralization under a handful of mega-firms and their Walled Gardens of services. The promise of Web3 was supposed to be a return to a fully decentralized network, but it just ended up being even more boutique fee-for-service Walled Gardens.

Modern internet is horribly expensive, inefficient, and vulnerable to outages at an international scale. Convenience has become obligation (always-on DRM, endless system updates, tighter and tighter obsolescence timelines). Interface has become surveillance (everything with a mic or a camera is used to spy on us). Communication has become commodity (constant data scraping, compiling, and trading of human interactions).

AI is all this on steroids.

Friend Computer, I just want you to know that I actually love my 24/7/365 integrated surveillance state. The internet is an unmitigated good and anyone who says otherwise should be flagged as such and disposed of.

Yeah and people also said the same thing about NFTs and now they barely exist. If there was a use for AI outside of very specific things I’d agree with you. But the uses for AI are very basic when comparing it to the Internet.

Nobody said that about NFTs. Maybe a couple of foolish kids and shysters but nobody ever took them seriously.

Maybe later on, but in the beginning everyone was hyping NFTs and companies were trying thousands of different ways to put NFTs onto their platform. The only difference is what side you are on.

I’m not saying NFTs are like AI. AI has actual potential. I’m just saying a lot of technologies are more hype than substance. My life has changed zero percent since AI came out. If anything it’s been more annoying and made things like Internet searches more frustrating. And when I saw that new ad for Google Gemini where they only vaguely tell you what it can even do or be used for, the same thought came to my mind, “Yeah, but what is it even for? Do you guys even know?”

It’s for whatever you want to use it for. Personally I use it continuously. Googling and just getting a bunch of links to sift through is a waste of time and money.

Mom says it’s my turn to repost this tomorrow.

I mean… Shouldn’t we start differentiating generative AI from stuff that’s actually useful like computer vision?

My headcanon is that AI = LLMs and machine learning = actually useful “artificial intelligence”

That’s the way most discussion trends right now. Blame the tech bros and investors chasing a buck for killing the term AI.

Apple Intelligence*

Maybe we can start referring to AI devices as Genius Bars.

Absolutely! I hate cool and useful shit like OCR (optical character recognition for anyone who doesn’t know) getting mixed with ~~content theft~~ generative AI.

That would be nice. If you’re talking about generative AI, then call it “generative AI” instead of simply “AI”.

It’s why only open source ai should exist

AI is hugely beneficial for drug development, however. Iirc AI has synthesised a stronger version of one of the antibiotics.

This is really more about what the public considers AI: generative AI bullshit.

Ethical

AI tools aren’t inherently unethical, and even the ones that use models with data provenance concerns (e.g., a tool that uses Stable Diffusion models) aren’t any less ethical than many other things that we never think twice about. They certainly aren’t any less ethical than tools that use Google services (Google Analytics, Firebase, etc).

There are ethical concerns with many AI tools and with the creation of AI models. More importantly, there are ethical concerns with certain uses of AI tools. For example, I think that it is unethical for a company to reduce the number of artists they hire / commission because of AI. It’s unethical to create nonconsensual deepfakes, whether for pornography, propaganda, or fraud.

Environmentally sustainable

At least people are making efforts to improve sustainability. https://hbr.org/2024/07/the-uneven-distribution-of-ais-environmental-impacts

That said, while AI does have energy costs, a lot of the comments I’ve read about AI’s energy usage are flat out wrong.

Great things

Depends on whom you ask, but “Great” is such a subjective adjective here that it doesn’t make sense to consider it one way or the other.

things that people want

Obviously people want the things that AI tools create. If they didn’t, they wouldn’t use them.

well-meaning

Excuse me, Sam Altman is a stand-up guy and I will not have you besmirching his name /s

Honestly my main complaint with this line is the implication that the people behind non-AI tools are any more well-meaning. I’m sure some are, but I can say the same with regard to AI. And in either case, the engineers and testers and project managers and everyone actually implementing the technology and trying to earn a paycheck? They’re well-meaning, for the most part.

but it allows for some people to type out one-liners and generate massive blobs of text at the same time that they could be doing their jobs. /codecraft

Lemmy

Edit: Oh shit. I didn’t realize this whole community is just for this… oh well.

Ah! I thought you guys wanted cheap, fast, and most importantly interpretable cell state determination from single cell sequencing data. My bad.

Speak for yourself, ai generating porn is the greatest thing since sliced bread

Until someone starts generating porn of you and your family members. It’s already a huge problem that I’m surprised nobody talks about.

should cosplay porn of celebrities be banned too?

You’re into naked people with more than the usual number of fingers? Not trying to kink shame…

The moment you look at even the “best” ones for more than a few seconds you start seeing lots of fun body horror.

Man, you haven’t seen the foot fetish images that Bing can create

I have. They’re pretty mediocre in my opinion. When you zoom in it looks so gross.

Nor do I wish to.

Suit yourself, for my purposes, the original statement still stands

Yeah I was thinking to myself “how can we make being a teenager even worse for kids?”

I would disagree with the answers to all those questions

Elaborate, please.

On the environmental question, AI tools are energy agnostic. If humans using electricity can be environmentally sustainable at all, so can AI. I suspect the energy requirements are going to drop drastically as more specialized hardware is developed.

There are already a lot of great things they can do in the category of generating content for enjoyment, both art and text based. One of the most popular Twitch streamers uses a language model. Games and interactive experiences can be much more realistic and responsive now. As far as I can tell, a lot of people are benefiting from the ability to ask a question and get an expert level answer on most topics that is correct at least half the time. And this is just the infancy of the technology.

As far as well meaning people, a lot of people working on the technology are researchers and computer scientists who legitimately believe in the potential for good of the technology. Of course, there are people who don’t care and only want profit also, but that’s true of basically every company. So you could probably accurately say it’s being worked on by any type of person in that spectrum, but you can’t say the opposite and deny that well meaning people are creating it.