I’m similar but it’s a side effect of my general gluttony. I’ll watch one episode and immediately want more. I didn’t intend to wait until the season was over to binge it all, but it just sort of happened because of life being hectic right now.

I’m beautiful and tough like a diamond…or beef jerky in a ball gown.

If it turns out to be the former, I don’t blame them.

I used to buy their stuff and use tuya-convert to flash Tasmota onto them. But they kept updating the firmware to lock that out, and I ended up returning a batch of 15 smart plugs because none of them would flash. They were too much of a PITA to try to crack open and flash the ESP8266 manually so I returned the whole batch as defective, left a scathing review, and blackballed the whole brand.

Nice. I’ve got the Anker version but it’s half the capacity at 1 kWh. It charges exclusively from 800W of PV input (though it can only handle 600W input) and can push out 2,000 W continuous and 3,000 W peak.

I’ve got a splitter from the PV that goes to both the Anker and a DC-DC converter which then goes to a few 12v -> USB power delivery adapters. Those can use the excess from the PV to charge power banks, phones, laptops, etc while the rest goes to the Anker (doesn’t seem to affect the MPPT unless there’s basically just no sunlight at all). Without the splitter, anything above 600W is wasted until I expand my setup later this spring.

All I can say for it is that it absolutely rocks! On sunny days, I run my entire homelab from it, my work-from-home office, charge all my devices, and run my refrigerator from it (if I feel like running an extension cord). It’s set up downstairs, so I also plug my washing machine into it and can get a few loads of laundry done as well.

All from its solar input.

Solutions that work for a corporate application where all the staff know each other are unlikely to be feasible for a publicly available application with thousands of users all over the world

This is something of a hybrid. There will be both general public users as well as staff. So for staff, we could just call them or walk down the hall and verify them but the public accounts are what I’m trying to cover (and, ideally, the staff would just use the same method as the public).

Figure if an attacker attempts the ‘forgot password’ method, it’s assumed they have access to the user’s email.

Yep, that’s part of the current posture. If MFA is enabled on the account, then a valid TOTP code is required to complete the password reset after they use the one-time email token. The only threat vector there is if the attacker has full access to the user’s phone (and thus their email and auth app) but I’m not sure if there’s a sane way to account for that. It may also be overkill to try to account for that scenario in this project. So we’re assuming the user’s device is properly secured (PIN, biometrics, password, etc).
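For anyone curious, here’s a rough sketch of that gate in Python using only the standard library. It is not our actual code: the function names (`totp`, `verify_totp`, `may_complete_password_reset`), the 30-second step, the 6-digit codes, and the one-step drift window are all assumptions for illustration. The TOTP math itself follows RFC 6238 with HMAC-SHA1.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: float, step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code (HMAC-SHA1) for a Unix timestamp."""
    counter = int(at // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, per RFC 4226
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, code: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept the current code, plus `window` steps either side for clock drift."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        t = now + drift * step
        if t < 0:
            continue  # no valid time step before the epoch
        # Compare as bytes in constant time to avoid leaking matches via timing.
        if hmac.compare_digest(totp(secret, t, step).encode(), code.encode()):
            return True
    return False


def may_complete_password_reset(email_token_ok: bool, mfa_enabled: bool,
                                secret: Optional[bytes], code: Optional[str],
                                at: Optional[float] = None) -> bool:
    """Hypothetical policy check: the email token is always required, and
    accounts with MFA enabled additionally need a valid TOTP code."""
    if not email_token_ok:
        return False
    if not mfa_enabled:
        return True
    if secret is None or code is None:
        return False
    return verify_totp(secret, code, at)
```

The point is that a stolen mailbox alone gets the attacker past the first check but not the second. It does nothing for the "attacker has the unlocked phone" case.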

If you are offering TOTP only,

Presently, yes, but we’re looking to eventually support WebAuthn

or otherwise an OTP sent via SMS with a short expiration time

We’re trying to avoid 3rd party services, so something like Twilio isn’t really an option (nor Duo, etc). We’re also trying to store the minimum amount of personal info, and currently there is no reason for us to require the user’s phone number (though staff can add it if they want it to show up as a method of contact). OTP via SMS is also considered insecure, so that’s another reason I’m looking at other methods.

“backup codes” of valid OTPs that the user needs to keep safe and is obtained when first enrolling in MFA

I did consider adding that to the onboarding but I have my doubts if people will actually keep them safe or even keep them at all. It’s definitely an option, though I’d prefer to not rely on it.

So for technical, human, and logistical reasons, I’m down to the following options to reset the MFA:

I’m leaning toward #3 unless there’s a compelling reason not to.

I thought about generating a list of backup codes during the onboarding process but ruled it out because I know for a fact that people will not hold on to them.

That’s why I’m leaning more toward, and soliciting feedback for, some method of automated recovery (email token + TOTP for password resets, email token + password for MFA resets, etc). I’m trying to also avoid using security questions but haven’t closed that door entirely.
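To make the pairing concrete, here’s a minimal sketch of how those combinations could be written down as a policy table. The names (`Factor`, `Reset`, `REQUIRED`, `reset_allowed`) are made up for illustration, and it assumes an account that already has MFA enabled.

```python
from enum import Enum, auto


class Factor(Enum):
    EMAIL_TOKEN = auto()
    PASSWORD = auto()
    TOTP = auto()


class Reset(Enum):
    PASSWORD = auto()
    MFA = auto()


# Each reset needs the email token plus the factor that is NOT being reset.
REQUIRED = {
    Reset.PASSWORD: frozenset({Factor.EMAIL_TOKEN, Factor.TOTP}),
    Reset.MFA: frozenset({Factor.EMAIL_TOKEN, Factor.PASSWORD}),
}


def reset_allowed(kind: Reset, verified: set[Factor]) -> bool:
    """True only if every required factor for this reset has been verified."""
    return REQUIRED[kind] <= verified
```

So `reset_allowed(Reset.MFA, {Factor.EMAIL_TOKEN})` is false, and it only becomes true once the password has also been verified.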

If you’re gonna repost stuff from ml at least re-upload it so I don’t have to connect to it.

<input type="text"> is suitable for political opinions.

To give perspective with a 3000 mah battery I am still lasting days.

Is that connected via Bluetooth or just running the LoRa radio? Curious if the V4 is any less power hungry than the V3. I never did a rundown test with one of my 3,000 mAh V3 units, but my daily driver had a 2,000 mAh battery and barely made it 14 hours before it was throwing the battery low warning. I kept it connected to my phone the whole time under most conditions.

Same conditions but with the nRF-based T1000e, it runs for about 2 days on a 700 mAh battery AND has GPS (I didn’t have GPS on my daily driver node). The difference is amazing.
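Back-of-the-envelope, that works out to roughly a 10x difference in average draw. A quick sketch of the arithmetic, assuming "about 2 days" means 48 hours and that the rated capacity was fully used (it wasn’t quite on the V3, since that was only the low-battery warning, so treat its figure as a rough upper bound):

```python
def avg_draw_ma(capacity_mah: float, runtime_h: float) -> float:
    """Average current draw in mA, assuming the full rated capacity was used."""
    return capacity_mah / runtime_h


v3 = avg_draw_ma(2000, 14)      # Heltec V3 daily driver: 2,000 mAh over ~14 h
t1000e = avg_draw_ma(700, 48)   # T1000e: 700 mAh over ~2 days

print(f"V3: ~{v3:.0f} mA, T1000e: ~{t1000e:.1f} mA, ratio: ~{v3 / t1000e:.1f}x")
# prints "V3: ~143 mA, T1000e: ~14.6 mA, ratio: ~9.8x"
```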

Could be any or all of that, yeah. You can also set the level of precision for your reported location, but I don’t think even the lowest precision settings would put it 1,000 miles away.

I live near-ish to an airport, and I’ll occasionally see nodes that are 1 or 2 hops and 100-200+ miles away. Best I can tell, the airborne node is legit relaying those which I think is pretty cool. Not really useful, but cool.

LoRa is a proprietary radio interface, so I don’t know how FOSS you can go with it, but the Meshtastic firmware itself is FOSS.

What are your use-cases? Are you looking for something to use as an everyday carry? An outdoor solar node to relay messages in your area? A node to use as a base station? All of the above?

For everyday carry, I semi-recently bought the SenseCap T1000e and I love it. I did a post about it here: https://startrek.website/post/34105873

Seeed (the company that makes the T1000e) also makes a nice outdoor, solar powered node: https://www.seeedstudio.com/SenseCAP-Solar-Node-P1-Pro-for-Meshtastic-LoRa-p-6412.html

They’ve also got a lot of options for various other configurations as well: https://www.seeedstudio.com/LoRa-and-Meshtastic-and-4G-c-2423.html

Those are all “turnkey” devices, but I’ve heard good things about the Heltec V4 if you want to go a more DIY route and make your own case and add your own accessories (GPS, accelerometer, etc).

Yeah, I hadn’t even heard of PF keys and naively assumed that was a different term for the function keys I knew.

Personally, I love that layout.

I’m always at a loss for what to put up as wall decorations, and I hate rats’ nests of cables. Win-win!

New U.S. rules will soon ban Chinese software in vehicle systems that connect to the cloud

Seems to me that the easiest way to get into compliance would be to not make the car connect to the cloud/internet. I’m gonna drive my 2017 model until I can buy a new car that isn’t a smartphone on wheels.

They’re separate queens and separate collectives/cooperatives.

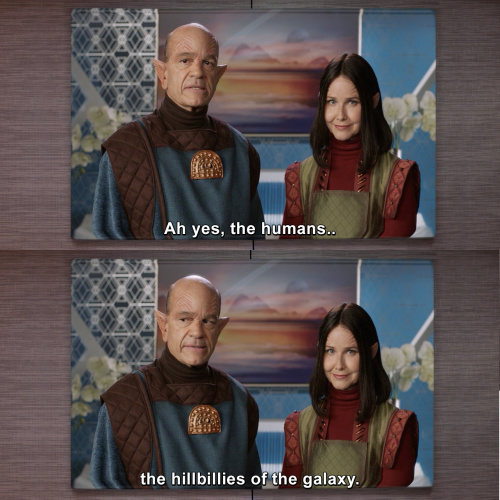

The Jurati Cooperative is, as of the end of Season 2, guarding the spatial anomaly that formed in the beginning of S2. They’re completely absent from the third season. Which I can understand since S3 was a fan-service reunion (which I loved) and there just wasn’t room in the 10 episodes for them.

The queen from S3 is the same one from VOY: Endgame and First Contact and part of the same collective since they were first introduced in TNG.

The new one affected the other one?

AFAIK, no, they had no effect on each other. The alternate timeline queen (that turned into Queen Jurati) was not the same queen seen in S3 or elsewhere. That queen was from a 2401 that no longer exists. She and her cooperative only exist because they went back in time and took the long way back to 2401.

Loops finally seems usable now. I tried the beta a while back and it was kinda “Meh” but it’s improved significantly since. And you can browse on the website now, too. I’m not into short form videos, but credit where it’s due.

Well, I do like short form videos, but I hate panning for the gems and just let my friends send me the ones that rise to the top.

It’s so common for “anti-censorship” to be code for “Nazi-friendly” that I’m immediately suspicious of any platform that uses that as a selling point.

I’m similarly suspicious, but it’s not just code for “nazi-friendly” but also crackpots, maladaptives, etc. Rational people who read and say “anti-censorship” in this context know it means that it’s not beholden to corporate or government interests. But everyone else seems to want to interpret that as “I can say whatever I want! How dare you mod anything I say?! Freeze-peach, y’all!”

I wish they’d pick a different term for these non-corporate alternatives, but I don’t have a better suggestion to offer right now.

Trash? None.

Clutter / work-in-progress: No comment.

Disclaimer: All of my LLM experience is with local models in Ollama on extremely modest hardware (an old laptop with NVIDIA graphics), so I can’t speak for the technical reasons the context window isn’t infinite or at least larger on the big players’ models. My understanding is that the context window is basically its short term memory. In humans, short term memory is also fairly limited in capacity. But unlike humans, the LLM can’t really see (or hold) the big picture in its mind.

But yeah, all you said is correct. Expanding on that, if you try to get it to generate something long-form, such as a novel, it’s basically just generating infinite chapters using the previous chapter (or as much of the history fits into its context window) as reference for the next. This means, at minimum, it’s going to be full of plot holes and will never reach a conclusion unless explicitly directed to wrap things up. And, again, given the limited context window, the ending will be full of plot holes and essentially based only on the previous chapter or two.
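A toy sketch of why that happens, as I understand it. This is not how any real model or Ollama handles context internally: it just treats whitespace-separated words as stand-in "tokens" and keeps only the most recent ones, and `build_prompt` and the sample chapters are made up for illustration.

```python
def build_prompt(chapters: list[str], window_words: int) -> str:
    """Return only the most recent text that fits in a window of `window_words` words."""
    if window_words <= 0:
        return ""
    words = " ".join(chapters).split()
    return " ".join(words[-window_words:])


chapters = [
    "Chapter 1: a locked door is introduced.",
    "Chapter 2: the team splits up.",
    "Chapter 3: a storm rolls in.",
]

# With a 10-word window, the setup from chapter 1 has already fallen out,
# so whatever gets written next can't pay it off.
prompt = build_prompt(chapters, window_words=10)
print(prompt)
```

Anything introduced early simply isn’t in front of the model anymore by the time the ending gets written, which is where the plot holes come from.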

It’s funny because I recently found an old backup drive from high school with some half-written Jurassic Park fan fiction on it, so I tasked an LLM with fleshing it out, mostly for shits and giggles. The result is pure slop that seems like it’s building to something and ultimately goes nowhere. The other funny thing is that it reads almost exactly like a season of Camp Cretaceous / Chaos Theory (the animated kids JP series) and I now fully believe those are also LLM-generated.